✅ Introduction & Overview

What is Test Data Management (TDM)?

Test Data Management (TDM) is the practice of creating, managing, and provisioning test data for application development, testing, and deployment. In DevSecOps, TDM ensures secure, compliant, and efficient test data usage throughout the CI/CD pipeline.

History & Background

- Manual Era: Developers manually created test data, leading to poor test coverage.

- Early Automation: Tools emerged to copy production data into test environments, which raised privacy concerns.

- Modern TDM: Automated, compliant, and integrated with CI/CD pipelines to support DevSecOps.

Why is TDM Relevant in DevSecOps?

- Enables security and compliance testing using sanitized data.

- Supports automation across build, test, and release workflows.

- Enhances shift-left testing by ensuring early access to valid test data.

- Reduces risk of data breaches and regulatory non-compliance (e.g., GDPR, HIPAA).

🧩 Core Concepts & Terminology

Key Terms

| Term | Definition |
|---|---|
| Test Data | Structured/unstructured data used to verify software behavior. |
| Data Masking | Obscuring sensitive data to protect privacy. |
| Data Subsetting | Creating a smaller, representative data sample. |
| Synthetic Data | Artificially generated data mimicking production datasets. |
| Compliance | Adhering to legal and regulatory data usage standards. |
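To make the data masking term concrete, here is a minimal sketch of field-level masking in plain Python. The helper names (`mask_email`, `mask_ssn`, `mask_record`) and the record layout are assumptions made for illustration; they do not come from any particular TDM tool. Note that keeping the last four SSN digits is a common convention, but it may still be too revealing for some compliance regimes.

```python
def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        return "***"  # not a well-formed address, so hide it entirely
    return f"{local[:1]}***@{domain}"


def mask_ssn(ssn: str) -> str:
    """Replace all but the last four digits with 'X', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in ssn)
    seen = 0
    out = []
    for ch in ssn:
        if ch.isdigit():
            seen += 1
            # Only the final four digits are left readable.
            out.append(ch if seen > total_digits - 4 else "X")
        else:
            out.append(ch)
    return "".join(out)


def mask_record(record: dict) -> dict:
    """Return a copy of the record with the sensitive fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["ssn"] = mask_ssn(record["ssn"])
    return masked
```

Calling `mask_record({"name": "Jane", "email": "jane.doe@example.com", "ssn": "123-45-6789"})` leaves the name untouched and masks the other two fields. Real masking tools typically add format-preserving and consistency guarantees that this sketch does not attempt.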

DevSecOps Lifecycle Integration

TDM spans:

- 🧪 Continuous Testing: Ensures realistic test environments.

- 🔐 Security Validation: Validates security policies with masked data.

- 🚀 Deployment Pipelines: Integrates data provisioning in CI/CD.

- 📊 Monitoring & Feedback: Validates post-deployment behavior using logs and data.

🏗️ Architecture & How It Works

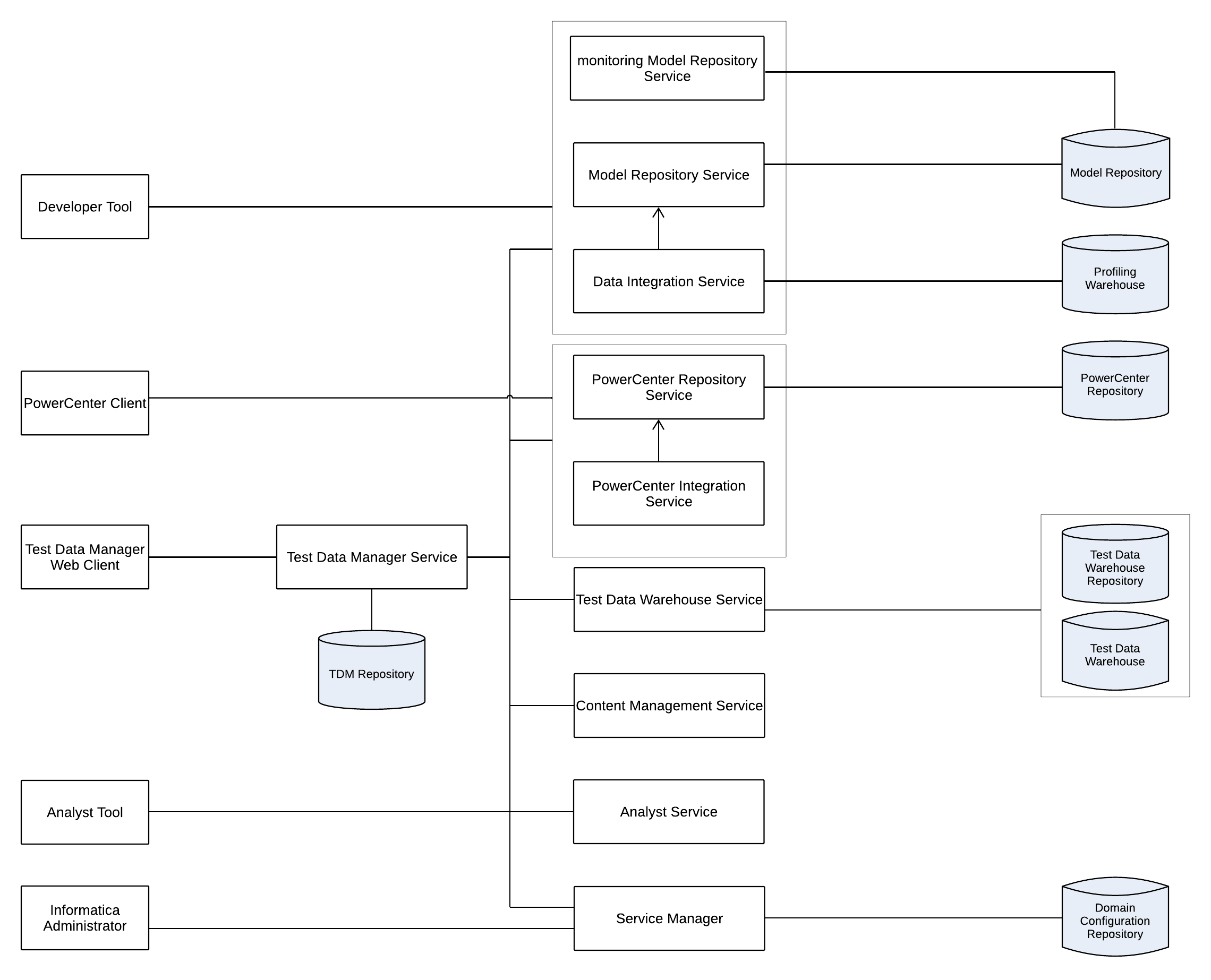

Components

- Data Sources: Production DBs, APIs, files, etc.

- TDM Engine: Extract, Mask, Subset, Generate

- Storage: Secure test data repositories

- Provisioning Tools: Scripts, APIs, or integrations

- Security Layer: Role-based access, auditing

- CI/CD Integrator: Jenkins, GitLab, GitHub Actions, etc.

Internal Workflow

flowchart LR
    A[Production Data] --> B{TDM Engine}
    B --> C[Data Masking]
    B --> D[Synthetic Generation]
    B --> E[Subsetting]
    C --> F[Secure Test Data]
    D --> F
    E --> F
    F --> G[Dev/Test Environments]

Integration Points with CI/CD or Cloud

| Tool | Integration Example |
|---|---|
| Jenkins | Post-build step to provision masked test data |
| GitHub Actions | Workflow job to trigger synthetic data gen |
| AWS | Use RDS snapshots + masking in AWS Lambda |
| Azure DevOps | Pipelines to run scripts against cloned DBs |

⚙️ Installation & Getting Started

Prerequisites

- Python 3.8+, Docker (optional)

- Access to a sample or cloned production database

- Admin privileges for data masking tools

Step-by-Step: Basic TDM with Faker (Python)

1. Install Dependencies

pip install Faker pandas

2. Sample Script for Synthetic Data

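from faker import Faker
import pandas as pd

fake = Faker()

# Build ten synthetic user records; no real customer data is involved.
data = [{"name": fake.name(), "email": fake.email(), "ssn": fake.ssn()} for _ in range(10)]
df = pd.DataFrame(data)

# Write the records to CSV without the DataFrame index column.
df.to_csv("synthetic_users.csv", index=False)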

3. Integration in Jenkins Pipeline

stage('Generate Test Data') {
    steps {
        sh 'python3 scripts/generate_synthetic_data.py'
    }
}

💼 Real-World Use Cases

1. Banking & Financial Sector

- Problem: Real customer data cannot be used in test environments because of GDPR and PCI-DSS constraints.

- Solution: Masked production data + synthetic transactions.

- Tools: Delphix, Broadcom TDM, IBM InfoSphere.

2. Healthcare Applications

- Scenario: HIPAA-compliant synthetic patient records for testing EHR platforms.

- Solution: Generate realistic HL7/FHIR structured data using TDM tools.

3. E-Commerce Platforms

- Problem: Functional and load testing require realistic SKU, customer, and order data, but full production datasets are too large to provision for every test run.

- Solution: Use data subsetting to create manageable yet representative datasets.

4. Cloud-Native DevSecOps

- Scenario: Terraform + TDM in CI/CD to auto-provision sanitized test DBs on AWS/GCP.

- Integration: Jenkins + AWS Lambda + TDM APIs.

✅ Benefits & Limitations

Key Benefits

- ✅ Reduces test environment setup time

- ✅ Enhances security by removing sensitive data

- ✅ Supports shift-left and continuous testing

- ✅ Facilitates regulatory compliance

Limitations

| Challenge | Description |
|---|---|
| 🚫 Complexity | Setup and orchestration can be complex |
| ⏳ Performance | Large datasets slow down pipelines |
| 💰 Cost | Commercial TDM tools can be expensive |
| 🔐 Data Risk | Poor masking can expose sensitive info |

🛠️ Best Practices & Recommendations

Security & Compliance

- Use dynamic data masking and tokenization

- Enforce RBAC and audit logs

- Align with GDPR, HIPAA, PCI-DSS standards
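As a rough illustration of the tokenization bullet above, the sketch below derives a deterministic token from a sensitive value with a keyed hash (HMAC-SHA256) from the Python standard library. This is one-way pseudonymization, which is only one flavor of tokenization; vault-based tools that can map tokens back to originals work differently. The function name `tokenize`, the `tok_` prefix, and the key handling are assumptions for the example. In practice the key would come from a secrets manager, not from source code.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes, length: int = 16) -> str:
    """Map a sensitive value to a stable token using a keyed hash.

    The same value and key always yield the same token, so joins across
    tables still line up after tokenization.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncation keeps tokens short; a longer prefix lowers collision risk.
    return "tok_" + digest[:length]
```

Because the token depends on the key, rotating the key produces a fresh, unrelated set of tokens, which can be useful when masked data is refreshed.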

Automation & Performance

- Automate TDM workflows using CI/CD pipelines

- Use data subsetting to reduce load times

- Clean up unused test datasets regularly
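The subsetting advice above can be sketched in a few lines. The example assumes two in-memory tables, `customers` and `orders`, linked by a hypothetical `customer_id` field; the function name and field names are illustrative only. It samples a fraction of the parent rows and then keeps just the child rows that reference them, so the subset stays referentially consistent.

```python
import random


def subset_with_children(customers, orders, fraction, seed=0):
    """Sample a fraction of customers and keep only their orders."""
    rng = random.Random(seed)  # fixed seed makes the subset repeatable
    k = min(len(customers), max(1, round(len(customers) * fraction)))
    sampled = rng.sample(customers, k)
    keep_ids = {c["id"] for c in sampled}
    # Drop orders whose parent customer was not sampled.
    kept_orders = [o for o in orders if o["customer_id"] in keep_ids]
    return sampled, kept_orders
```

A fixed seed is a deliberate choice here: pipeline runs that use the same seed get the same subset, which makes test failures easier to reproduce. Real schemas usually have deeper foreign-key chains, which dedicated subsetting tools walk automatically.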

Maintenance & Monitoring

- Periodically refresh masked data

- Store synthetic data schemas in version control

- Integrate alerts for test data failures
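One way to approach alerts for test data failures is a small check that scans a masked dataset for values that still look like full US Social Security numbers. The sketch below is an assumption-laden example: the function name `find_unmasked_ssns`, the CSV input, and the single regex are all illustrative, and a real check would cover more data types. It is meant for masked data only, since synthetic SSNs would match the same pattern.

```python
import csv
import io
import re

# Matches values shaped like a full SSN, e.g. 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def find_unmasked_ssns(csv_text: str) -> list:
    """Return (row_number, column) pairs where a full SSN-like value appears."""
    findings = []
    reader = csv.DictReader(io.StringIO(csv_text))
    # Row 1 is the header, so data rows start at 2.
    for row_number, row in enumerate(reader, start=2):
        for column, value in row.items():
            if isinstance(value, str) and SSN_PATTERN.search(value):
                findings.append((row_number, column))
    return findings
```

A pipeline step could call this on each masked export and exit with a non-zero status when the returned list is not empty, which most CI systems treat as a failed stage.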

🔁 Comparison with Alternatives

| Approach | Description | Pros | Cons |
|---|---|---|---|
| TDM | Full lifecycle test data mgmt | Secure, automated | Setup overhead |
| Manual Data | Hand-created test sets | Simple | Low coverage, not secure |
| Prod Clone | Full copy of prod data | Realistic | High risk, non-compliant |
| Mocking Services | API-level mocking | Fast, stateless | Limited logic coverage |

When to Choose TDM?

Use TDM when:

- Regulatory compliance is mandatory.

- Multiple teams need reliable test environments.

- CI/CD automation and data fidelity are critical.

🔚 Conclusion

Test Data Management is a foundational element in secure, scalable DevSecOps pipelines. It not only enhances testing but ensures privacy, compliance, and reliability across the software lifecycle.

As DevSecOps matures, expect:

- AI-generated test datasets

- Tighter TDM integration with IaC tools

- Improved open-source ecosystem

📚 Resources & Communities

- 🔗 Delphix TDM

- 🔗 Broadcom Test Data Manager

- 🔗 Mockaroo (synthetic data)

- 🔗 TDM Community on Stack Overflow
