1. Introduction & Overview

Apache Airflow has become a staple in modern data orchestration and workflow automation. In the DevSecOps paradigm, where automation, traceability, and security are critical, Airflow offers capabilities that make it a natural fit for orchestrating security controls, CI/CD tasks, compliance workflows, and more.

What is Apache Airflow?

Apache Airflow is an open-source platform to programmatically author, schedule, and monitor workflows using Directed Acyclic Graphs (DAGs). It allows for modular, dynamic, and version-controlled workflow management.

History or Background

- Created by: Airbnb in 2014

- Open-sourced: 2015 under the Apache 2.0 license

- Apache Incubation: 2016

- Top-Level Apache Project: 2019

Why is It Relevant in DevSecOps?

DevSecOps integrates security at every stage of the development lifecycle. Apache Airflow helps by:

- Automating vulnerability scans, code checks, and security audits

- Enabling secure, trackable task orchestration

- Supporting reproducibility, audit logging, and compliance enforcement

- Integrating with CI/CD and cloud-native tools

2. Core Concepts & Terminology

Key Terms and Definitions

| Term | Definition |

|---|---|

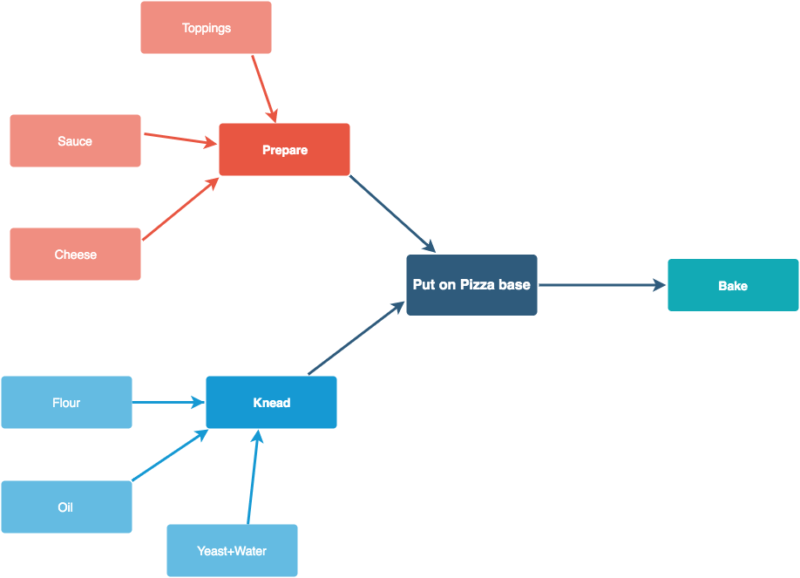

| DAG | Directed Acyclic Graph — a collection of tasks with defined dependencies and execution order. |

| Operator | A single task definition (e.g., BashOperator, PythonOperator, DockerOperator). |

| Task | An instance of an operator. |

| Scheduler | Monitors DAGs and triggers task instances based on schedule or triggers. |

| Executor | Determines how and where tasks are run (e.g., LocalExecutor, CeleryExecutor). |

| XCom | Cross-communication mechanism for tasks to share data. |

How It Fits into the DevSecOps Lifecycle

| DevSecOps Stage | Apache Airflow Role |
|---|---|
| Plan | Automate compliance data extraction and analysis |
| Develop | Trigger static code analysis tools (e.g., SonarQube) |
| Build | Automate artifact scanning and SBOM generation |
| Test | Schedule DAST tools (e.g., OWASP ZAP) |
| Release | Ensure pre-release security checks via pipelines |
| Deploy | Orchestrate canary/security-aware deployments |
| Operate | Schedule periodic vulnerability scans |
| Monitor | Integrate with alerting and SIEM tools |

3. Architecture & How It Works

Core Components

- Scheduler: Parses DAG files, decides when task instances are due, and hands them to the executor

- Web Server: UI for DAG monitoring and control

- Metadata Database: Stores DAG runs, task instance state, connections, variables, and XComs

- Executor: Decides how and where queued tasks run (e.g., Celery, Kubernetes)

- Workers: Processes or pods that actually run the tasks

- DAG Definition Files: Python code defining workflows

Internal Workflow

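- The scheduler parses DAG definitions from the Python files in the DAGs folder

- It creates DAG runs and task instances according to each DAG's schedule and dependencies

- Ready task instances are queued and run by the executor on workers

- Task state is recorded in the metadata DB; task logs are written to local or remote log storage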

- UI and CLI provide observability and control
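As a toy model of the dependency step above (not Airflow's actual scheduler, which also handles schedules, trigger rules, pools, and retries), the following sketch releases tasks in "waves": a task becomes runnable only after all of its upstream tasks have finished. It uses Python's standard-library graphlib module (Python 3.9+) rather than any Airflow API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG: each task maps to the set of tasks it depends on (its upstream tasks).
upstream = {
    "checkout": set(),
    "run_gitleaks": {"checkout"},
    "run_trivy": {"checkout"},
    "report": {"run_gitleaks", "run_trivy"},
}


def run_in_dependency_order(deps):
    """Return the tasks grouped into waves; each wave only depends on earlier waves.

    prepare() raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstream tasks are all done
        waves.append(ready)
        # In Airflow, the executor would run these in parallel on workers;
        # here we simply mark them as finished.
        sorter.done(*ready)
    return waves


print(run_in_dependency_order(upstream))
# → [['checkout'], ['run_gitleaks', 'run_trivy'], ['report']]
```

The "acyclic" part of DAG matters here: a cycle means no task in it can ever become ready, which is why the sorter rejects cyclic graphs up front.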

Architecture Diagram (Descriptive)

```text
+-------------+        +------------+        +-------------+
|  Scheduler  | <----> |  Metadata  | <----> | Web Server  |
+-------------+        |  Database  |        +-------------+
       |               +------------+
       |                     ^
       v                     v
+-------------+        +-----------------+        +------------------+
|  Executor   | -----> |   Worker Pool   | -----> |  Task Execution  |
+-------------+        +-----------------+        +------------------+
```

Integration Points with CI/CD and Cloud

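- GitHub Actions / GitLab CI: Trigger Airflow DAG runs through the Airflow REST API

- KubernetesExecutor: Runs each task in its own Kubernetes pod

- HashiCorp Vault: Retrieve connections and variables securely through a secrets backend

- Cloud Providers: Orchestrate services such as AWS Lambda, GCP Cloud Functions, and Azure Logic Apps

- Security Tools: Run scanners such as Trivy, Snyk, and Gitleaks from tasks (e.g., via BashOperator or DockerOperator)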
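A CI job can trigger a DAG run with a single HTTP call. The following minimal sketch assumes Airflow 2.x's stable REST API (POST /api/v1/dags/{dag_id}/dagRuns) with the basic-auth API backend enabled; newer Airflow releases changed the API version and authentication scheme, so check the REST API reference for your version. The user name, password, and conf payload are hypothetical.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build a POST request that creates a DAG run via Airflow 2.x's stable REST API.

    Assumes the API is served under /api/v1 and basic auth is enabled for it.
    """
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": conf}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def trigger_dag_run(request, timeout=30):
    """Send the request and return the parsed JSON response (raises HTTPError on 4xx/5xx)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

In a pipeline step, `trigger_dag_run(build_trigger_request("http://localhost:8080", "secret_scan", {"git_sha": sha}, user, password))` would start the secret-scanning DAG shown later in this article, with the credentials read from the CI system's secret store rather than hard-coded.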

4. Installation & Getting Started

Basic Setup or Prerequisites

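- Python 3.8+ (check the Python versions supported by your Airflow release)

- PostgreSQL or MySQL for the metadata database (SQLite is suitable only for local testing)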

- Docker (optional, for containerized deployment)

Hands-on: Beginner Setup Using Docker Compose

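```bash
# Download the official Docker Compose file (pin a specific version instead of "stable" for reproducibility)
curl -LfO 'https://airflow.apache.org/docs/apache-airflow/stable/docker-compose.yaml'

# Create the directories the compose file mounts into the containers
mkdir -p ./dags ./logs ./plugins ./config

# Run containers with your host user ID so files in ./dags and ./logs stay writable
echo -e "AIRFLOW_UID=$(id -u)" > .env

# Initialize the metadata database and default user, then start all services
docker compose up airflow-init
docker compose up
```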

- Access Web UI: http://localhost:8080

- Default credentials (set by the compose file): airflow / airflow; change them before exposing the instance

Create a Sample DAG for Secret Scanning

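```python
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator  # Airflow 2.x import path

with DAG(
    dag_id="secret_scan",
    start_date=datetime(2023, 1, 1),
    schedule="@daily",  # `schedule` replaces the deprecated `schedule_interval` (Airflow 2.4+)
    catchup=False,      # don't create runs for past intervals
) as dag:
    # Gitleaks exits non-zero when it finds leaks, which marks this task as failed.
    # --redact keeps secret values out of the Airflow task logs.
    scan_code = BashOperator(
        task_id="run_gitleaks",
        bash_command="gitleaks detect --source /path/to/code --redact",
    )
```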

5. Real-World Use Cases in DevSecOps

1. Automated Code Secret Scanning

- Run Gitleaks every night on new pull requests

- Push results to a security dashboard
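Before results reach a dashboard, a PythonOperator task could condense Gitleaks' JSON report (written with `--report-format json --report-path report.json`) into per-rule counts without copying the secrets themselves. This sketch assumes Gitleaks v8 report fields such as `RuleID` and `File`; the `summarize_findings` helper and its output shape are hypothetical.

```python
import json
from collections import Counter


def summarize_findings(report_json):
    """Condense a Gitleaks JSON report into dashboard-friendly counts.

    Only rule IDs and file paths are kept; matched secret values are dropped
    so they never reach the dashboard or the Airflow logs.
    """
    findings = json.loads(report_json) or []  # treat "null" like an empty report
    by_rule = Counter(f.get("RuleID", "unknown") for f in findings)
    files = sorted({f.get("File", "") for f in findings})
    return {
        "total": len(findings),
        "by_rule": dict(by_rule),
        "files": files,
    }
```

Keeping only metadata is a deliberate choice: the dashboard needs to know where leaks are, not what they contain.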

2. Vulnerability Management Orchestration

- Schedule container scanning using Trivy or Grype

- Push CVEs into JIRA for tracking
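One way to push CVEs into JIRA is to convert Trivy's JSON output (`trivy image --format json`) into issue-creation payloads for Jira's REST API v2 (POST /rest/api/2/issue). The sketch assumes Trivy's `Results`/`Vulnerabilities` report layout; the project key, the `Bug` issue type, and the HIGH/CRITICAL filter are illustrative choices, and sending the requests is left to whatever HTTP client or hook you use.

```python
import json

# Assumption: only file tickets for these severities.
SEVERITIES_TO_TRACK = {"CRITICAL", "HIGH"}


def trivy_to_jira_issues(report_json, project_key, image):
    """Convert a Trivy JSON report into Jira REST API v2 issue payloads (one per CVE/package)."""
    report = json.loads(report_json)
    issues, seen = [], set()
    for result in report.get("Results") or []:
        # "Vulnerabilities" is missing or null when a target has no findings.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            if severity not in SEVERITIES_TO_TRACK:
                continue
            cve, pkg = vuln["VulnerabilityID"], vuln.get("PkgName")
            if (cve, pkg) in seen:  # the same CVE can appear under several targets
                continue
            seen.add((cve, pkg))
            fixed = vuln.get("FixedVersion") or "no fix available"
            issues.append({
                "fields": {
                    "project": {"key": project_key},
                    "issuetype": {"name": "Bug"},
                    "summary": f"[{severity}] {cve} in {pkg} ({image})",
                    "description": (
                        f"Target: {result.get('Target')}\n"
                        f"Installed: {vuln.get('InstalledVersion')}\n"
                        f"Fixed in: {fixed}"
                    ),
                    "labels": ["trivy", severity.lower()],
                }
            })
    return issues
```

Deduplicating on (CVE, package) keeps one ticket per actual fix rather than one per scanned layer or file.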

3. DAST + SAST Hybrid Pipelines

- Run SonarQube and OWASP ZAP in a pipeline

- Use BranchPythonOperator (or the @task.branch decorator) to run the scans only for main-branch builds
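BranchPythonOperator calls a Python function and follows only the task_id(s) it returns, skipping the other directly downstream tasks. A minimal callable for this pipeline might look like the following; it assumes the CI trigger passes the branch name in the DAG run's conf (e.g., `{"branch": "main"}`), and the task IDs `run_sonarqube` and `skip_security_scans` are hypothetical.

```python
# Assumption: branches that get the full SAST + DAST run.
PROTECTED_BRANCHES = {"main"}


def choose_scan_path(**context):
    """Branch callable in the style BranchPythonOperator expects.

    Returns the task_id of the downstream task to follow. The branch name is
    assumed to arrive in the DAG run's conf from the CI trigger.
    """
    conf = context["dag_run"].conf or {}
    if conf.get("branch") in PROTECTED_BRANCHES:
        return "run_sonarqube"  # hypothetical task_id; ZAP would run downstream of it
    return "skip_security_scans"  # hypothetical no-op task_id
```

It would be wired up as `BranchPythonOperator(task_id="choose_scan_path", python_callable=choose_scan_path)`, with both hypothetical tasks as its direct downstream tasks. Missing or empty conf falls through to the skip path, so ad hoc runs do not trigger a full scan by accident.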

4. Compliance Check Scheduling

- Automate weekly CIS Benchmark scans

- Email results to InfoSec and DevOps leads
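The e-mail step could be a PythonOperator that formats the scan results and hands the message to smtplib or Airflow's EmailOperator. The input format below (a list of dicts with `id`, `title`, and `status` keys) and the `build_compliance_email` helper are assumptions; adapt them to whatever your CIS scanner emits.

```python
from email.message import EmailMessage


def build_compliance_email(results, sender, recipients, benchmark="CIS Benchmark"):
    """Build a weekly summary e-mail from benchmark check results.

    `results` is assumed to be a list of dicts such as
    {"id": "5.2.4", "title": "<check title>", "status": "PASS" or "FAIL"}.
    """
    failed = [r for r in results if r["status"] == "FAIL"]
    passed = len(results) - len(failed)

    msg = EmailMessage()
    msg["Subject"] = f"[{benchmark}] {passed}/{len(results)} checks passed"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)

    # Plain-text body: a one-line summary followed by each failed check.
    lines = [f"{benchmark}: {passed} passed, {len(failed)} failed.", ""]
    lines += [f"FAIL {r['id']}: {r['title']}" for r in failed]
    msg.set_content("\n".join(lines))
    return msg
```

Putting the pass count in the subject lets InfoSec and DevOps leads spot a regression without opening the message.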

6. Benefits & Limitations

Key Advantages

- Modular and extensible DAGs

- Rich plugin ecosystem

- Cloud-native executor options (Kubernetes, Celery)

- Pluggable secrets backends (e.g., HashiCorp Vault, AWS Secrets Manager)

- API and CLI-friendly for DevSecOps automation

Common Limitations

| Limitation | Description |
|---|---|
| Complexity | Steep learning curve for non-developers |
| Latency | Not real-time; designed for batch task orchestration |
| Resource Consumption | CeleryExecutor setups need a message broker (e.g., Redis or RabbitMQ) and a pool of long-running workers |
| Security | Requires strict hardening (authentication, RBAC, TLS, secrets management) for production use |

7. Best Practices & Recommendations

Security Tips

- Use RBAC (Role-Based Access Control)

- Store secrets in Vault or AWS Secrets Manager

- Enable TLS/SSL on web interface and APIs

Performance & Maintenance

- Purge or archive old metadata regularly (e.g., with airflow db clean)

- Use KubernetesExecutor for horizontal scaling

- Configure task retries (retries, retry_delay) to absorb transient failures
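Retries are usually set once through a DAG's `default_args`. The values below are illustrative; `retries`, `retry_delay`, `retry_exponential_backoff`, and `max_retry_delay` are standard BaseOperator arguments.

```python
from datetime import timedelta

# Retry policy applied to every task in a DAG via DAG(..., default_args=default_args).
# The values are examples only; tune them to how flaky your scanners and targets are.
default_args = {
    "retries": 3,                              # re-run a failed task up to 3 more times
    "retry_delay": timedelta(minutes=5),       # wait before the first retry
    "retry_exponential_backoff": True,         # lengthen the wait between successive retries
    "max_retry_delay": timedelta(minutes=30),  # upper bound on the backoff
}
```

Individual tasks can still override these. For example, a Gitleaks task that fails because it found secrets will fail again on every retry, so setting `retries=0` on that task avoids repeated runs and duplicate alerts.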

Compliance Alignment

- Track logs for auditability

- Integrate with SIEM tools (e.g., Splunk)

- Use Airflow to document and execute policy-as-code
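To make task activity easy to ship to a SIEM, a task can log one JSON object per security-relevant action. The sketch uses only the standard library; the logger name, the field names, and the idea of a forwarder (e.g., a Splunk forwarder) reading the task logs are assumptions about your logging setup, not an Airflow feature.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("devsecops.audit")  # hypothetical logger name


def audit_event(dag_id, task_id, run_id, action, outcome, **details):
    """Emit and return one JSON audit record for a security-relevant action.

    One JSON object per line is straightforward for SIEM forwarders to parse.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "dag_id": dag_id,
        "task_id": task_id,
        "run_id": run_id,
        "action": action,
        "outcome": outcome,
        **details,  # free-form extras, e.g. finding counts
    }
    line = json.dumps(record, sort_keys=True)
    audit_logger.info(line)
    return line
```

Recording the DAG, task, and run IDs in every record lets auditors trace a SIEM alert back to the exact Airflow run and its logs.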

Automation Ideas

- Automatically rotate keys via Airflow DAG

- Use DAGs for SBOM verification pipelines

- Auto-tag scanned Docker images based on CVE score
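For the image-tagging idea, a task could map the worst CVSS score found in an image to a tag. The bands follow the CVSS v3.x qualitative severity rating scale; the `risk-<band>` tag format and both helper names are hypothetical.

```python
def severity_band(score):
    """Map a CVSS v3.x base score to its qualitative rating.

    None 0.0, Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0.
    """
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


def image_risk_tag(cvss_scores):
    """Return a tag such as 'risk-high' based on the worst CVE found in an image."""
    worst = max(cvss_scores, default=0.0)  # an image with no CVEs is 'risk-none'
    return f"risk-{severity_band(worst)}"
```

The resulting tag could then be applied with docker tag (for example, from myapp:1.0 to myapp:1.0-risk-high) or through your registry's API, so deployment policies can refuse images above a chosen band.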

8. Comparison with Alternatives

| Feature / Tool | Airflow | Argo Workflows | Jenkins Pipelines | Prefect |
|---|---|---|---|---|
| DAG Support | Yes | Yes | No (pipeline stages) | Yes |
| UI & Observability | Rich UI | Minimal | Moderate | Modern UI |
| Extensibility | High | High (k8s-native) | High | High |
| Security Focus | Medium | Medium | Low | Medium |

When to Choose Apache Airflow

- You need complex workflows with many dependencies

- You want pluggable, reusable pipelines with strong monitoring

- You require fine-grained scheduling and backfilling

- You want to automate security + compliance tasks alongside DevOps
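Backfilling is what happens when catchup is enabled (the sample DAG sets catchup=False) or a backfill is requested explicitly: Airflow creates a run for each past schedule interval that has already ended. The sketch models only a fixed daily schedule aligned to midnight, using naive datetimes; Airflow's timetables handle time zones, cron expressions, and custom intervals, and the helper name is hypothetical.

```python
from datetime import datetime, timedelta


def daily_data_intervals(start_date, now):
    """List the daily intervals a catch-up would cover between start_date and now.

    Each run covers [interval_start, interval_start + 1 day) and only becomes
    due once that interval has fully ended.
    """
    one_day = timedelta(days=1)
    intervals = []
    interval_start = start_date
    while interval_start + one_day <= now:
        intervals.append((interval_start, interval_start + one_day))
        interval_start += one_day
    return intervals


# Two complete days have elapsed, so two runs would be created; Jan 3 is still open.
runs = daily_data_intervals(datetime(2023, 1, 1), datetime(2023, 1, 3, 12))
```

This interval-based model is what makes re-running a security scan for a specific past day reproducible: each run knows exactly which time window it covers.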

9. Conclusion

Apache Airflow is a powerful workflow orchestration tool that, when integrated thoughtfully into the DevSecOps pipeline, enhances automation, improves visibility, and strengthens security posture. Its flexibility and broad ecosystem make it well suited to use cases ranging from vulnerability management to compliance automation.

Next Steps

- Try building your first DevSecOps DAG

- Integrate Airflow with your CI/CD pipelines

- Contribute plugins or operators for security tools

Official Resources

- Docs: https://airflow.apache.org/docs

- GitHub: https://github.com/apache/airflow

- Slack: https://apache-airflow.slack.com

- Community: https://airflow.apache.org/community