Introduction & Overview

Containerization, specifically with Docker, has become a cornerstone technology in modern DataOps practices, enabling teams to streamline data pipelines, enhance scalability, and ensure consistency across environments. This tutorial provides an in-depth exploration of Docker in the context of DataOps, covering its core concepts, setup, real-world applications, benefits, limitations, and best practices.

What is Containerization with Docker?

Containerization is a lightweight virtualization technology that allows applications and their dependencies to be packaged into standardized, isolated units called containers. Docker is the leading platform for containerization, providing tools to create, deploy, and manage containers efficiently.

- Definition: Containers encapsulate an application, its libraries, dependencies, and configuration files, ensuring consistent execution across different environments.

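- Key Characteristics:

  - Portable: Run on any host with a compatible container runtime, OS kernel, and CPU architecture.

  - Lightweight: Share the host OS kernel instead of booting a full guest OS, reducing overhead compared to virtual machines.

  - Isolated: Each container has its own filesystem, process, and network namespaces, so applications and their dependencies do not conflict.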

History or Background

Docker was first released in 2013 by Solomon Hykes as an open-source project, building on existing Linux container technologies like LXC. It popularized containerization by simplifying the creation and management of containers through a user-friendly CLI and standardized image formats.

- Milestones:

  - 2008: Linux cgroups (control groups), originally developed at Google, are merged into the mainline kernel and become a foundation for containerization.

  - 2013: Docker is open-sourced at PyCon, making container technology accessible and developer-friendly.

  - 2014: Docker 1.0 is released, gaining enterprise adoption.

  - 2015–2020: Docker becomes a standard tool in DevOps and DataOps workflows and integrates with CI/CD pipelines and cloud platforms, while Kubernetes emerges for orchestration.

  - Now: Docker is widely used in CI/CD pipelines, cloud-native applications, and DataOps environments.

Why is it Relevant in DataOps?

DataOps is a methodology that applies agile and DevOps principles to data management, emphasizing collaboration, automation, and continuous delivery of data pipelines. Docker’s relevance in DataOps stems from its ability to:

- Ensure Consistency: Containers provide reproducible environments for data processing, testing, and deployment.

- Enable Scalability: Containers support distributed data pipelines, integrating seamlessly with orchestration tools like Kubernetes.

- Accelerate Development: Data scientists and engineers can iterate quickly in isolated environments.

- Support CI/CD: Containers integrate with CI/CD pipelines, enabling automated testing and deployment of data workflows.
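Ensuring consistency in practice means pinning every dependency, so that rebuilding an image months later installs the same versions. The check below is a minimal sketch under an assumed team policy: every requirement must use `==`. The function name `find_unpinned` is hypothetical, and the parsing is deliberately simplified. Environment markers and inline hashes are not handled.

```python
import re

# Assumed team policy (not a pip rule): every requirement pins an exact version with "==".
_PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^=\s]+$")

def find_unpinned(requirements_text):
    """Return requirement lines that do not pin an exact version."""
    unpinned = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):  # skip blank lines and pip options such as -r
            continue
        if not _PINNED.match(line):
            unpinned.append(line)
    return unpinned

if __name__ == "__main__":
    sample = """
pandas==1.5.3
numpy>=1.20   # floating: may change between builds
pyarrow
"""
    print(find_unpinned(sample))  # ['numpy>=1.20', 'pyarrow']
```

A CI job could fail the build whenever this list is non-empty, before `docker build` ever runs.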

Core Concepts & Terminology

Key Terms and Definitions

- Docker Image: A read-only template containing the application, dependencies, and configurations.

- Container: A running instance of a Docker image.

- Dockerfile: A script defining the steps to build a Docker image.

- Docker Hub: A registry for sharing and storing Docker images.

- Container Orchestration: Tools like Kubernetes or Docker Swarm that manage multiple containers across clusters.

- DataOps Lifecycle: The stages of data management, including ingestion, processing, analysis, and delivery.

| Term | Definition | Example in DataOps |
|---|---|---|
| Container | Lightweight runtime unit that contains application + dependencies | Running a data ingestion script in Python with exact dependencies |
| Image | Blueprint of a container (read-only template) | `python:3.11-slim` used for ETL jobs |
| Dockerfile | Script defining how to build an image | Installing Pandas & PySpark in a custom image |
| Registry | Repository of images (public/private) | Docker Hub, AWS ECR, GCP Artifact Registry |
| Volume | Persistent storage for containers | Storing raw datasets or logs outside container lifecycle |
| Network | Virtual communication layer for containers | Connecting Airflow scheduler with workers |
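The Volume and Network rows map directly onto `docker run` flags: `-v` mounts a host path or named volume, and `--network` attaches the container to a network. The sketch below only assembles an argument list, so it runs without Docker. The helper name `docker_run_args` and the example paths, volume name, network name, and script are hypothetical.

```python
import shlex

def docker_run_args(image, command=None, volumes=None, network=None, env=None, remove=True):
    """Assemble (but do not execute) a `docker run` command as an argument list.

    volumes maps a host path or named volume to a path inside the container;
    env maps environment variable names to values.
    """
    args = ["docker", "run"]
    if remove:
        args.append("--rm")  # delete the container when its process exits
    for source, target in (volumes or {}).items():
        args += ["-v", f"{source}:{target}"]  # data here outlives the container
    if network:
        args += ["--network", network]  # join an existing user-defined network
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]
    args.append(image)
    args += list(command or [])
    return args

if __name__ == "__main__":
    argv = docker_run_args(
        "python:3.11-slim",
        command=["python", "/app/ingest.py"],                    # hypothetical script
        volumes={"/srv/raw-data": "/data", "etl-logs": "/logs"},  # host dir + named volume
        network="etl-net",                                        # hypothetical network
        env={"STAGE": "dev"},
    )
    print(shlex.join(argv))
    # To execute instead of printing (requires Docker): subprocess.run(argv, check=True)
```

A user-defined network such as `etl-net` must exist before the container starts (for example via `docker network create etl-net`). Containers on it can then reach each other by name, which is how an Airflow scheduler and its workers are typically connected.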

How It Fits into the DataOps Lifecycle

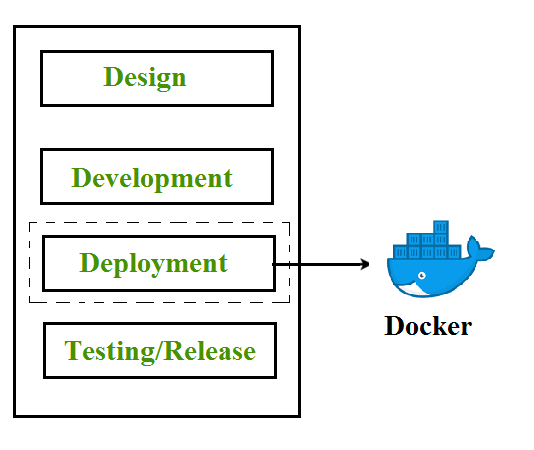

Docker supports various stages of the DataOps lifecycle:

- Data Ingestion: Containers can run ingestion and ETL (Extract, Transform, Load) tools such as Apache NiFi, as well as workflow orchestrators such as Apache Airflow.

- Processing & Analysis: Data scientists can use containers to run Python, R, or Spark environments consistently.

- Testing & Validation: Containers enable isolated testing of data pipelines without affecting production.

- Deployment: Containers ensure that data applications deploy reliably across development, staging, and production environments.
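For the Testing & Validation stage, one common pattern is to make a data check the container's main process. `docker run` returns the exit status of that process, so a non-zero exit fails the CI job that launched it. The sketch below illustrates this under stated assumptions: the column names (`id`, `amount`), the rules, and the loader mentioned in the final comment are all hypothetical.

```python
import sys

def validate(rows):
    """Return a list of problems found in the rows; an empty list means the data passed."""
    problems = []
    if not rows:
        problems.append("dataset is empty")
    for i, row in enumerate(rows):
        if row.get("id") is None:
            problems.append(f"row {i}: missing id")
        amount = row.get("amount")
        # Hypothetical rule: amount must be a non-negative number
        if not isinstance(amount, (int, float)) or amount < 0:
            problems.append(f"row {i}: invalid amount {amount!r}")
    return problems

def main(rows):
    """Report problems and return the process exit code (0 = pass, 1 = fail)."""
    problems = validate(rows)
    for problem in problems:
        print(f"FAIL {problem}", file=sys.stderr)
    print("validation passed" if not problems else f"{len(problems)} problem(s) found")
    return 1 if problems else 0

# In the image's entrypoint script, with a hypothetical loader for the mounted data:
#     sys.exit(main(load_rows("/data/input.csv")))
```

Because the exit code propagates, the validation container acts as a gate. A pipeline can refuse to promote data to the next stage unless this step exits with 0.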

Architecture & How It Works

Components & Internal Workflow

Docker’s architecture consists of several key components:

- Docker Engine: The runtime that builds and runs containers, consisting of:

  - Docker Daemon (dockerd): Manages containers, images, volumes, and networks.

  - Docker CLI: The `docker` command-line client, which sends requests to the daemon.

  - REST API: The interface the daemon exposes for programmatic control of Docker; the CLI uses it too.

- Images: Layered, read-only templates, usually built from a Dockerfile, in which each filesystem-changing instruction adds a layer.

- Containers: Lightweight, isolated environments created from images.

- Registries: Repositories (e.g., Docker Hub) for storing and distributing images.

- Networking: Docker provides networking modes (bridge, host, overlay) for container communication.

Workflow:

- Write a Dockerfile specifying the application and dependencies.

- Build an image using `docker build`.

- Push the image to a registry (e.g., Docker Hub) using `docker push`.

- Pull and run the image as a container using `docker pull` and `docker run`.
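Image names passed to `docker tag`, `docker push`, and `docker pull` are references of the form `[registry/]repository[:tag][@digest]`. Docker fills in defaults for omitted parts: `docker.io` as the registry, the `library/` namespace for single-name Docker Hub images, and `latest` as the tag. The function below is a simplified sketch of that normalization. It follows those defaults but skips the character and length validation Docker performs, and the name `parse_image_ref` is hypothetical.

```python
from typing import NamedTuple, Optional

class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]

def parse_image_ref(ref):
    """Split an image reference into registry, repository, tag, and digest,
    applying Docker Hub defaults. Simplified: no character or length validation."""
    name, digest = ref, None
    if "@" in name:
        name, digest = name.split("@", 1)

    # The first path component is a registry host only if it looks like one.
    registry = "docker.io"
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest

    # Only a ":" in the last path component marks a tag.
    tag = None
    if ":" in name.rsplit("/", 1)[-1]:
        name, tag = name.rsplit(":", 1)
    if tag is None and digest is None:
        tag = "latest"  # default when neither a tag nor a digest is given

    # Single-name images on Docker Hub live in the "library" namespace.
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name
    return ImageRef(registry, name, tag, digest)

if __name__ == "__main__":
    for ref in ["dataops-example", "yourusername/dataops-example",
                "python:3.9-slim", "localhost:5000/team/etl:1.2"]:
        print(ref, "->", parse_image_ref(ref))
```

These defaults explain why `docker push dataops-example` would target `docker.io/library/dataops-example`, which ordinary users cannot write to. An image is therefore re-tagged under a user namespace or a private registry host before pushing.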

Architecture Diagram Description

A diagram of this architecture would show:

- A central Docker Engine box, containing the Docker Daemon and REST API.

- A Docker CLI arrow interacting with the Daemon.

- A Docker Hub cloud connected to the Engine for image storage.

- Multiple Containers running on the Engine, each with isolated applications (e.g., Python, Spark).

- A Network layer connecting containers to each other and external services.

```text
[Developer/CI] ---> [Dockerfile] ---> [Docker Engine] ---> [Image Registry]
                                             |                      |
                                             v                      v
                                    [Container Build] ---> [Running Containers]
                                                                    |
                                                                    v
                                                       [Data Pipelines / ML Jobs]
```

Integration Points with CI/CD or Cloud Tools

- CI/CD: Docker integrates with tools like Jenkins, GitHub Actions, or GitLab CI to automate building, testing, and deploying containers.

  - Example: A Jenkins pipeline builds a Docker image, runs tests in a container, and deploys to Kubernetes.

- Cloud Tools: Docker works with AWS ECS, Azure Container Instances, and Google Kubernetes Engine for scalable deployments.

- DataOps Tools: Containers can run Airflow, Kafka, or Spark, integrating with cloud-native services like AWS S3 or Google BigQuery.
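The Jenkins example follows a build, test-in-container, push sequence. The sketch below expresses that sequence in plain Python rather than in Jenkins or GitHub Actions syntax. The registry host `registry.example.com`, the commit SHA, and the test command are placeholders. Tagging images with the commit SHA is a common convention, assumed here, not a Docker requirement. The script only prints the commands unless `dry_run=False` is passed, which requires Docker and registry access.

```python
import subprocess

def ci_steps(image, commit_sha, test_cmd):
    """Return the docker commands a CI job would run, in order: build, test, push."""
    # Assumed convention: tag with the short commit SHA so each image is traceable
    tag = f"{image}:{commit_sha[:12]}"
    return [
        ["docker", "build", "-t", tag, "."],
        ["docker", "run", "--rm", tag, *test_cmd],  # run the tests inside the built image
        ["docker", "push", tag],  # push last, after build and tests
    ]

def run_pipeline(steps, dry_run=True):
    """Print each step; when dry_run is False, execute it and stop at the first failure."""
    for argv in steps:
        print("+", " ".join(argv))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit, halting the pipeline
            subprocess.run(argv, check=True)

if __name__ == "__main__":
    steps = ci_steps(
        "registry.example.com/dataops-example",      # hypothetical registry
        "3f9c2e1ab47d90c1e55aa0d2b6f8c3e4d5a6b7c8",  # placeholder commit SHA
        ["python", "-m", "pytest", "-q"],             # assumes pytest is installed in the image
    )
    run_pipeline(steps)  # dry run: prints the commands only
```

Running tests inside the image that will be deployed is the key design choice. The artifact that passed the tests is exactly the one that gets pushed.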

Installation & Getting Started

Basic Setup & Prerequisites

To get started with Docker:

- System Requirements:

  - Linux, macOS, or Windows (with WSL2 for Windows).

  - Minimum 4 GB RAM, 20 GB disk space.

- Prerequisites:

  - Install Docker Desktop (macOS/Windows) or Docker Engine (Linux).

  - Basic knowledge of command-line operations.

  - Optional: A Docker Hub account for sharing images.

Hands-On: Step-by-Step Setup Guide

This guide sets up Docker and runs a simple Python-based data processing container.

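1. Install Docker:

- Download and install Docker Desktop from docker.com (on Linux, Docker Engine can be installed instead).

- Verify installation:

```shell
docker --version
```

Expected output is similar to `Docker version <version>, build <commit>`, where the version and build identifier depend on your installation.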

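2. Create a Dockerfile:

Create a file named Dockerfile:

```dockerfile
# Small official Python base image
FROM python:3.9-slim

# Working directory inside the image
WORKDIR /app

# Copy and install dependencies first so this layer stays cached when only the code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code last, since it changes most often
COPY script.py .

CMD ["python", "script.py"]
```

3. Create a Python Script: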

Create script.py for a simple data processing task:

```python
import pandas as pd

print("Processing data...")

df = pd.DataFrame({'col1': [1, 2, 3], 'col2': ['a', 'b', 'c']})

print(df)
```

4. Create Requirements File:

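Create requirements.txt. NumPy is pinned to a 1.x release because pandas 1.5.x wheels were built against NumPy 1.x and fail to import under NumPy 2.x:

```text
pandas==1.5.3
numpy==1.26.4
```

5. Build the Docker Image: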

```shell
docker build -t dataops-example .
```

6. Run the Container:

```shell
docker run dataops-example
```

Expected output:

```text
Processing data...
   col1 col2
0     1    a
1     2    b
2     3    c
```

7. Push to Docker Hub (Optional):

```shell
# Authenticate with Docker Hub before pushing
docker login
docker tag dataops-example yourusername/dataops-example
docker push yourusername/dataops-example
```

Real-World Use Cases

Scenario 1: Data Pipeline Automation

A financial company uses Docker to run Apache Airflow for orchestrating ETL pipelines. Each task (e.g., data extraction, transformation) runs in a separate container, ensuring consistent environments and easy scaling on Kubernetes.
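The scheduling idea in Scenario 1 can be sketched without Airflow. Each task runs in its own image, and a scheduler starts a task's container only after its upstream tasks finish. The sketch below uses Python's standard `graphlib` to group tasks into batches that could run as parallel containers. The task names and image tags are hypothetical, and Airflow's own operators for running containers are not used here.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task names the image it runs in and its upstream tasks.
TASKS = {
    "extract":   {"image": "etl/extract:1.0",   "after": []},
    "transform": {"image": "etl/transform:1.0", "after": ["extract"]},
    "validate":  {"image": "etl/validate:1.0",  "after": ["transform"]},
    "load":      {"image": "etl/load:1.0",      "after": ["validate"]},
    "report":    {"image": "etl/report:1.0",    "after": ["transform"]},
}

def execution_batches(tasks):
    """Group tasks into batches; tasks in one batch do not depend on each other
    and could run as parallel containers."""
    sorter = TopologicalSorter({name: spec["after"] for name, spec in tasks.items()})
    sorter.prepare()  # also raises CycleError if the dependencies form a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # in a real scheduler, mark done only after the container exits 0
    return batches

if __name__ == "__main__":
    for batch in execution_batches(TASKS):
        print([f"docker run --rm {TASKS[t]['image']}" for t in batch])
```

Because each task has its own image, `validate` and `report` can pin different library versions without conflicting, even when they run side by side.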

Scenario 2: Machine Learning Model Development

A data science team containerizes Jupyter Notebooks with specific Python versions and libraries (e.g., TensorFlow, scikit-learn). This allows reproducible experiments across team members and deployment to production.

Scenario 3: Real-Time Data Processing

A retail company uses Docker to deploy Apache Kafka and Spark containers for real-time inventory analytics. Containers ensure that the same configurations are used in development and production.

Industry-Specific Example: Healthcare

Hospitals use Docker to containerize data processing pipelines that must comply with HIPAA. Container isolation helps protect patient data, though compliance also depends on controls such as encryption and access management. Containers run Spark jobs to analyze medical records, integrating with AWS Redshift for storage.

Benefits & Limitations

Key Advantages

- Portability: Run containers consistently across development, testing, and production.

- Efficiency: Containers are lightweight, using fewer resources than VMs.

- Modularity: Break down complex data pipelines into manageable containers.

- Ecosystem: Rich integration with CI/CD, cloud platforms, and DataOps tools.

Common Challenges & Limitations

- Learning Curve: Requires understanding of Dockerfiles, networking, and orchestration.

- Security Risks: Misconfigured containers can expose vulnerabilities.

- Storage Management: Data written to a container's writable layer is lost when the container is removed, so persistent data requires volumes or external storage.

- Resource Overhead: Running multiple containers can strain system resources.

Comparison Table

| Feature | Docker (Containers) | Virtual Machines | Kubernetes (Orchestration) |
|---|---|---|---|
| Resource Usage | Lightweight | Heavy | Lightweight containers plus control-plane overhead |
| Startup Time | Seconds | Minutes | Seconds |
| Isolation | Process-level | Full OS | Container-level |
| DataOps Use Case | ETL, ML pipelines | Legacy apps | Scalable pipelines |

Best Practices & Recommendations

Security Tips

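- Use minimal base images (e.g., `python:slim` instead of `python`).

- Regularly update images and scan them for vulnerabilities, for example with `docker scout cves <image>`. The older `docker scan` command has been retired in favor of Docker Scout.

- Avoid running containers as root: add a `USER` instruction to the Dockerfile.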
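The `USER` tip can be checked automatically. The function below is a toy check, not a replacement for dedicated Dockerfile linters such as hadolint. It warns when the final build stage has no non-root `USER`. As an additional assumed rule for reproducibility, it also warns when a base image has no explicit tag or uses `latest`. The name `lint_dockerfile` is hypothetical, and the parser only understands comments and backslash line continuations.

```python
def lint_dockerfile(text):
    """Toy Dockerfile check (hypothetical helper, not hadolint).

    Warns when a base image has no explicit tag or uses `latest` (an assumed
    reproducibility rule) and when the final build stage has no non-root USER.
    Only comments and backslash line continuations are understood.
    """
    instructions = []
    for line in text.replace("\\\n", " ").splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            keyword, _, args = line.partition(" ")
            instructions.append((keyword.upper(), args.strip()))

    warnings = []
    final_user = None
    for keyword, args in instructions:
        if keyword == "FROM":
            final_user = None  # USER does not carry over into a new build stage
            tokens = [t for t in args.split() if not t.startswith("--")]  # skip --platform etc.
            image = tokens[0] if tokens else ""
            name = image.rsplit("/", 1)[-1]  # the tag lives in the last path component
            untagged = ":" not in name or name.endswith(":latest")
            # Limitation: a FROM that names an earlier stage (e.g. "FROM builder") is also flagged
            if image != "scratch" and "@" not in image and untagged:
                warnings.append(f"base image '{image}' is not pinned to a specific tag")
        elif keyword == "USER":
            final_user = args.split(":")[0]  # "user:group" -> "user"
    if final_user in (None, "root", "0"):
        warnings.append("final stage runs as root; add a non-root USER")
    return warnings

if __name__ == "__main__":
    example = 'FROM python:latest\nWORKDIR /app\nCOPY script.py .\nCMD ["python", "script.py"]\n'
    for warning in lint_dockerfile(example):
        print("WARN:", warning)
```

Resetting `final_user` at each `FROM` matters for multi-stage builds. Only the user of the last stage applies to the image that is actually shipped.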

Performance

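- Reduce the number of layers by combining related `RUN` commands in the Dockerfile (e.g., installing packages and cleaning up in one step).

- Use multi-stage builds to keep build tools out of the final image and reduce its size.

- Leverage the build cache: order instructions from least to most frequently changing, so that editing application code does not invalidate dependency layers.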
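The caching advice follows from how the build cache works. A step is reused only if its parent layer, its instruction, and, for `COPY`, the copied files are unchanged, and the first cache miss forces every later step to rebuild. `RUN` steps are cached on their command text together with the parent layer. The sketch below is a deliberately simplified model of that rule, not Docker's actual cache-key algorithm, and it ignores `COPY` flags such as `--chown`. It compares the hands-on Dockerfile's order (dependencies first) with a hypothetical variant that copies the whole project first.

```python
import hashlib

def layer_keys(instructions, files):
    """Simplified cache model: each step's key hashes the parent key, the instruction
    text, and, for COPY, the names and contents of the copied files."""
    keys, parent = [], ""
    for instr in instructions:
        material = parent + "\n" + instr
        if instr.startswith("COPY "):
            sources = instr.split()[1:-1]  # "COPY <src>... <dest>"
            for src in sources:
                names = sorted(files) if src == "." else [src]  # "." = whole build context
                for name in names:
                    material += f"\n{name}\n{files[name]}"
        parent = hashlib.sha256(material.encode()).hexdigest()
        keys.append(parent)
    return keys

def rebuilt_steps(instructions, old_files, new_files):
    """Return the instructions that miss the cache after the build context changes."""
    old = layer_keys(instructions, old_files)
    new = layer_keys(instructions, new_files)
    for i, (a, b) in enumerate(zip(old, new)):
        if a != b:
            return instructions[i:]  # the first miss invalidates every later step
    return []

# Dependencies first, as in the hands-on Dockerfile
DEPS_FIRST = [
    "FROM python:3.9-slim",
    "WORKDIR /app",
    "COPY requirements.txt .",
    "RUN pip install --no-cache-dir -r requirements.txt",
    "COPY script.py .",
    'CMD ["python", "script.py"]',
]
# Hypothetical variant that copies the whole project before installing dependencies
COPY_ALL_FIRST = [
    "FROM python:3.9-slim",
    "WORKDIR /app",
    "COPY . .",
    "RUN pip install --no-cache-dir -r requirements.txt",
    'CMD ["python", "script.py"]',
]

if __name__ == "__main__":
    before = {"requirements.txt": "pandas==1.5.3\n", "script.py": "print('v1')\n"}
    after = {**before, "script.py": "print('v2')\n"}  # only the script changed
    print("deps first:", rebuilt_steps(DEPS_FIRST, before, after))
    print("copy all first:", rebuilt_steps(COPY_ALL_FIRST, before, after))
```

When only `script.py` changes, the dependencies-first order re-runs just the last two steps. The copy-everything order also re-runs `pip install`, which is usually the slowest step.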

Maintenance

- Clean up stopped containers, unused networks, dangling images, and build cache with `docker system prune`; add `-a` to also remove all unused images.

- Monitor container performance with tools like Prometheus or Grafana.

Compliance Alignment

- Ensure containers meet compliance standards (e.g., HIPAA, GDPR) by using trusted images and secure configurations.

- Implement role-based access control (RBAC) for Docker Hub and registries.

Automation Ideas

- Automate image builds in CI/CD pipelines using GitHub Actions or Jenkins.

- Use Docker Compose for multi-container DataOps workflows.

Comparison with Alternatives

Alternatives to Docker

- Podman: A daemonless container engine, compatible with Docker images, ideal for security-conscious environments.

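- Kubernetes: Not a direct alternative. Kubernetes orchestrates containers and commonly runs images built with Docker, although since version 1.24 it uses CRI runtimes such as containerd or CRI-O rather than Docker Engine directly.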

- Virtual Machines: Provide stronger isolation but are resource-intensive.

When to Choose Docker

- Choose Docker for lightweight, portable, and consistent environments in DataOps pipelines.

- Choose Podman for rootless, daemonless container management.

- Choose VMs for legacy applications requiring full OS isolation.

- Choose Kubernetes for orchestrating large-scale containerized applications.

Conclusion

Docker is a transformative technology in DataOps, enabling consistent, scalable, and automated data pipelines. Its ability to integrate with CI/CD, cloud platforms, and DataOps tools makes it indispensable for modern data teams. As containerization evolves, trends like serverless containers and AI-driven orchestration will further enhance its role.

Next Steps:

- Explore Docker Compose for multi-container setups.

- Experiment with Kubernetes for orchestration.

- Join the Docker Community for support.

Resources:

- Official Docker Documentation

- Docker Hub

- DataOps Manifesto