Introduction & Overview

What is Prefect?

Prefect is an open-source workflow orchestration tool designed to simplify the creation, scheduling, and monitoring of data pipelines. It allows data engineers and scientists to define workflows as Python code, offering a Python-native experience without requiring domain-specific languages (DSLs) or complex configuration files. Prefect automates critical DataOps tasks such as dependency management, failure handling, and observability, making it a powerful tool for building resilient and scalable data pipelines.

History or Background

Prefect was founded in 2018 by Jeremiah Lowin, a former contributor to Apache Airflow, with the goal of creating a more flexible and Pythonic orchestration tool. The initial release introduced task mapping, enabling dynamic workflows. In 2022, Prefect 2.0 removed the rigid DAG (Directed Acyclic Graph) constraint, embracing native Python control flow for greater flexibility. The 2024 release of Prefect 3.0 further enhanced performance and introduced open-sourced event-driven automation, solidifying its position in modern data stacks.

Why is it Relevant in DataOps?

DataOps emphasizes automation, collaboration, and observability in data workflows. Prefect aligns with these principles by:

- Automating Workflows: Simplifies scheduling, retries, and error handling.

- Enhancing Observability: Provides real-time monitoring via a user-friendly UI.

- Supporting Collaboration: Integrates with CI/CD pipelines and cloud platforms, enabling team-driven development.

- Ensuring Scalability: Runs workflows locally or in distributed environments like Kubernetes or cloud services.

Prefect’s Python-first approach reduces the learning curve for data teams, making it a cornerstone for DataOps practices.

Core Concepts & Terminology

Key Terms and Definitions

- Task: The smallest unit of work, a Python function decorated with

@task. Tasks handle specific operations like data extraction or transformation. - Flow: A collection of tasks organized into a workflow, defined with the

@flowdecorator. Flows manage task dependencies and execution order. - State: Tracks the status of tasks or flows (e.g., Pending, Running, Failed, Completed).

- Deployment: A server-side configuration specifying when, where, and how a flow runs.

- Work Pool/Worker: Work pools organize tasks for execution, while workers retrieve and execute scheduled runs.

- Automations: Event-driven actions triggered by conditions like task failure or completion.

- Blocks: Store reusable configurations, such as credentials for AWS or databases.

- Artifacts: Persisted outputs (e.g., tables, markdown) displayed in the Prefect UI.

| Term | Definition | Example |

|---|---|---|

| Flow | A workflow or DAG (Directed Acyclic Graph) in Prefect. | ETL pipeline from API → DB |

| Task | A single unit of work inside a flow. | Extract data from API |

| Deployment | A versioned, schedulable flow configuration. | Daily ETL job |

| Agent | Worker process that executes flows. | Kubernetes agent running jobs |

| Block | A reusable, pluggable configuration object (e.g., storage, secrets). | AWS S3 block for storing results |

| Orion | Prefect 2.0’s orchestration engine. | Handles scheduling, logs, events |

| Prefect Cloud | SaaS control plane for managing workflows at scale. | Team collaboration dashboard |

How It Fits into the DataOps Lifecycle

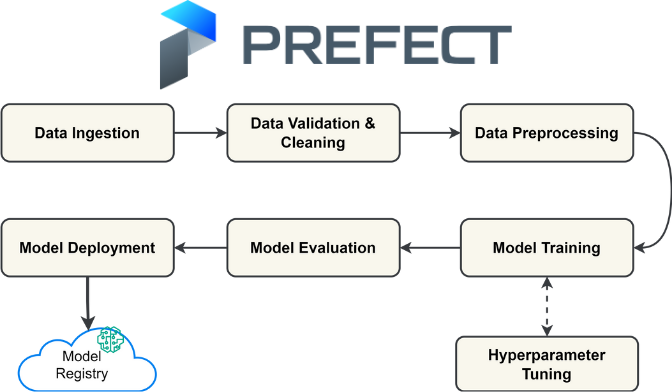

The DataOps lifecycle includes stages like data ingestion, transformation, testing, deployment, and monitoring. Prefect contributes as follows:

- Ingestion & Transformation: Tasks handle data extraction and processing.

- Testing: Supports CI/CD integration for automated testing of flows.

- Deployment: Deployments enable scheduled or event-driven execution in production.

- Monitoring: Real-time UI and logging provide insights into pipeline performance.

- Orchestration: Manages dependencies and retries, ensuring reliable execution.

Architecture & How It Works

Components & Internal Workflow

Prefect’s architecture consists of two main layers:

- Execution Layer:

- Tasks & Flows: Python functions that define the work and its orchestration.

- Agents: Polling services that fetch scheduled flow runs from work pools and execute them.

- Workers: Execute tasks within a flow, supporting local or distributed environments.

- Orchestration Layer:

- Orion API Server: Manages workflow state, scheduling, and metadata.

- Prefect UI: Provides real-time visualization of flow runs, logs, and dependency graphs.

- Database: Stores run history, states, and logs for persistence.

Workflow:

- Define tasks and flows in Python.

- Deploy flows to a work pool via the Orion API.

- Agents poll the work pool, assigning tasks to workers.

- Workers execute tasks, updating states in the database.

- The UI displays real-time status and logs.

Architecture Diagram Description

The architecture can be visualized as:

- A Client Layer where Python code defines tasks and flows.

- An Orchestration Layer with the Orion API and UI, managing scheduling and monitoring.

- An Execution Layer with agents and workers running tasks in local, cloud, or hybrid environments.

- A Storage Layer (database) for persisting states and logs.

- Arrows show bidirectional communication: code to API, API to agents/workers, and state updates back to the database/UI.

Developer (Python Code) → Prefect Orion API

↳ Deployment Scheduler → Agent → Worker (Executes tasks)

↳ Storage Blocks (S3, GCS, DB, etc.)

↳ Prefect UI/Cloud Dashboard (Monitoring, Logs, Alerts)

Integration Points with CI/CD or Cloud Tools

- CI/CD: Prefect integrates with tools like GitHub Actions or Jenkins, allowing automated testing and deployment of flows.

- Cloud Tools: Supports AWS (S3, Lambda), GCP, Azure, Kubernetes, and Docker for execution and storage.

- Data Tools: Integrates with Snowflake, Spark, Dask, and dbt for data processing.

Installation & Getting Started

Basic Setup & Prerequisites

- Python: Version 3.8 or higher.

- Virtual Environment: Recommended for dependency isolation.

- Prefect: Install via pip.

- Optional: Prefect Cloud account or self-hosted Orion server for advanced features.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

- Create a Virtual Environment:

python -m venv prefect-env

source prefect-env/bin/activate # On Windows: prefect-env\Scripts\activate2. Install Prefect:

pip install prefect3. Verify Installation:

prefect version4. Start the Prefect Orion Server (Local):

prefect server startAccess the UI at http://localhost:4200.

5. Write a Simple Flow:

Save as hello_prefect.py:

from prefect import task, flow

@task

def say_hello():

print("Hello, Prefect!")

@flow

def hello_flow():

say_hello()

if __name__ == "__main__":

hello_flow()6. Run the Flow:

python hello_prefect.pyOutput includes logs like:

11:00:00.123 | INFO | prefect.engine - Created flow run 'happy-owl' for flow 'hello-flow'

Hello, Prefect!7. Connect to Prefect Cloud (Optional):

- Sign up at

https://app.prefect.cloud. - Login via CLI:

prefect cloud login -k <your-api-key>8. Deploy the flow:

prefect deployment build hello_prefect.py:hello_flow -n hello-deployment -q default

prefect deployment apply hello-deployment.yamlReal-World Use Cases

- ETL Pipeline for Retail Analytics:

- Scenario: A retail company extracts sales data from an API, transforms it to calculate daily revenue, and loads it into a Snowflake data warehouse.

- Implementation: Use

@taskfor API extraction, transformation (e.g., aggregating sales), and loading to Snowflake. Schedule daily runs via a deployment. - Code Snippet:

from prefect import task, flow

import requests

@task(retries=2)

def extract_sales():

response = requests.get("https://api.retail.com/sales")

return response.json()

@task

def transform_sales(data):

return [{"date": item["date"], "revenue": item["amount"]} for item in data]

@task

def load_to_snowflake(data):

# Simplified example

print(f"Loading to Snowflake: {data[:5]}")

@flow

def retail_etl():

sales = extract_sales()

transformed = transform_sales(sales)

load_to_snowflake(transformed)

if __name__ == "__main__":

retail_etl()2. Machine Learning Model Training:

- Scenario: A fintech company trains a fraud detection model daily, using Prefect to orchestrate data preprocessing, model training, and validation.

- Implementation: Tasks handle data extraction from S3, preprocessing with pandas, and model training with scikit-learn. Deployments trigger runs based on new data events.

3. Data Lake Management in Healthcare:

- Scenario: A healthcare provider manages a data lake, orchestrating data ingestion from IoT devices, quality checks, and storage in AWS S3.

- Implementation: Prefect flows validate data formats, apply quality rules, and store results in S3, with automations alerting teams on failures.

4. Real-Time Data Integration for E-Commerce:

- Scenario: An e-commerce platform syncs inventory data across multiple systems in real-time.

- Implementation: Event-driven flows trigger on inventory updates, coordinating tasks to update databases and notify downstream systems.

Benefits & Limitations

Key Advantages

- Pythonic Workflow: Write workflows in native Python, leveraging existing tools and libraries.

- Robust Error Handling: Built-in retries, timeouts, and state tracking ensure reliability.

- Scalability: Supports local, cloud, or hybrid execution environments.

- Observability: Real-time UI and logging simplify monitoring.

- Community and Ecosystem: Nearly 30,000 engineers in the Prefect community, with integrations for AWS, Snowflake, and more.

Common Challenges or Limitations

- Learning Curve for Advanced Features: Dynamic workflows and automations require understanding Prefect’s internals.

- Resource Overhead: Local Orion server can be resource-intensive for large-scale workflows.

- Dependency Management: Requires careful handling to avoid issues like missing imports or hardcoded values.

- Prefect Cloud Costs: While open-source is free, advanced features in Prefect Cloud may incur costs for enterprise use.

Best Practices & Recommendations

- Modularize Tasks: Break workflows into reusable tasks for maintainability.

- Parameterize Flows: Use parameters to make flows flexible and reusable.

- Enable Retries and Timeouts: Configure

@task(retries=3, timeout_seconds=300)to handle transient failures. - Monitor via UI: Regularly check the Prefect UI for run statuses and logs.

- Secure Credentials: Store sensitive data in Prefect Blocks, not hardcoded in code.

- Test Locally: Validate flows locally before deploying to production.

- Compliance Alignment: Use Prefect Cloud’s RBAC and SCIM for access control in regulated industries.

- Automations: Set up notifications for failures or delays using Prefect’s automation features.

Comparison with Alternatives

| Feature | Prefect | Apache Airflow | Dagster | Luigi |

|---|---|---|---|---|

| Language | Python-native | Python with DSL | Python-native | Python with some boilerplate |

| Ease of Use | High (no DSL, pure Python) | Moderate (requires learning DAGs) | High (Pythonic) | Moderate (requires class-based setup) |

| Dynamic Workflows | Yes (no rigid DAGs) | Limited (DAG-based) | Yes (dynamic graphs) | Limited (static dependencies) |

| UI & Monitoring | Modern, real-time UI | Basic UI, needs customization | Modern UI | Minimal UI |

| Scalability | Local, cloud, Kubernetes | Cloud, Kubernetes | Cloud, Kubernetes | Limited scalability |

| Error Handling | Built-in retries, state tracking | Manual configuration | Built-in retries | Basic retry support |

| Community | Growing (~30,000 engineers) | Large, established | Growing | Smaller, less active |

When to Choose Prefect

- Choose Prefect for Python-centric teams needing dynamic workflows, modern UI, and easy integration with cloud tools.

- Choose Airflow for legacy systems or when extensive community plugins are needed.

- Choose Dagster for data-focused pipelines with strong type checking.

- Choose Luigi for simple, small-scale pipelines with minimal dependencies.

Conclusion

Prefect is a powerful, Python-native tool that simplifies DataOps by providing robust orchestration, observability, and scalability. Its ability to handle dynamic workflows and integrate with modern data stacks makes it ideal for data engineers building resilient pipelines. While it has a learning curve for advanced features, its benefits outweigh limitations for most use cases.

Future Trends:

- Increased adoption of event-driven workflows with Prefect 3.0’s automation features.

- Growing integration with AI/ML pipelines for automated model training.

- Enhanced Prefect Cloud features for enterprise compliance and scalability.