Introduction & Overview

Agile Data is a methodology that applies Agile principles to data management, emphasizing iterative development, collaboration, and adaptability to deliver high-quality data products efficiently. In the context of DataOps, Agile Data serves as a foundational approach to streamline data workflows, break down silos, and accelerate data-driven decision-making. This tutorial provides a detailed exploration of Agile Data within DataOps, covering its core concepts, architecture, setup, use cases, benefits, limitations, best practices, and comparisons with alternatives.

What is Agile Data?

Agile Data refers to the application of Agile methodologies, originally developed for software engineering, to data management and analytics. It focuses on iterative, collaborative, and incremental approaches to designing, developing, and managing data pipelines and products. Agile Data prioritizes delivering small, functional increments of data solutions, enabling rapid feedback and continuous improvement.

Key Characteristics:

- Iterative development of data pipelines and analytics.

- Cross-functional collaboration between data engineers, scientists, analysts, and business stakeholders.

- Emphasis on delivering business value through data quickly and reliably.

History or Background

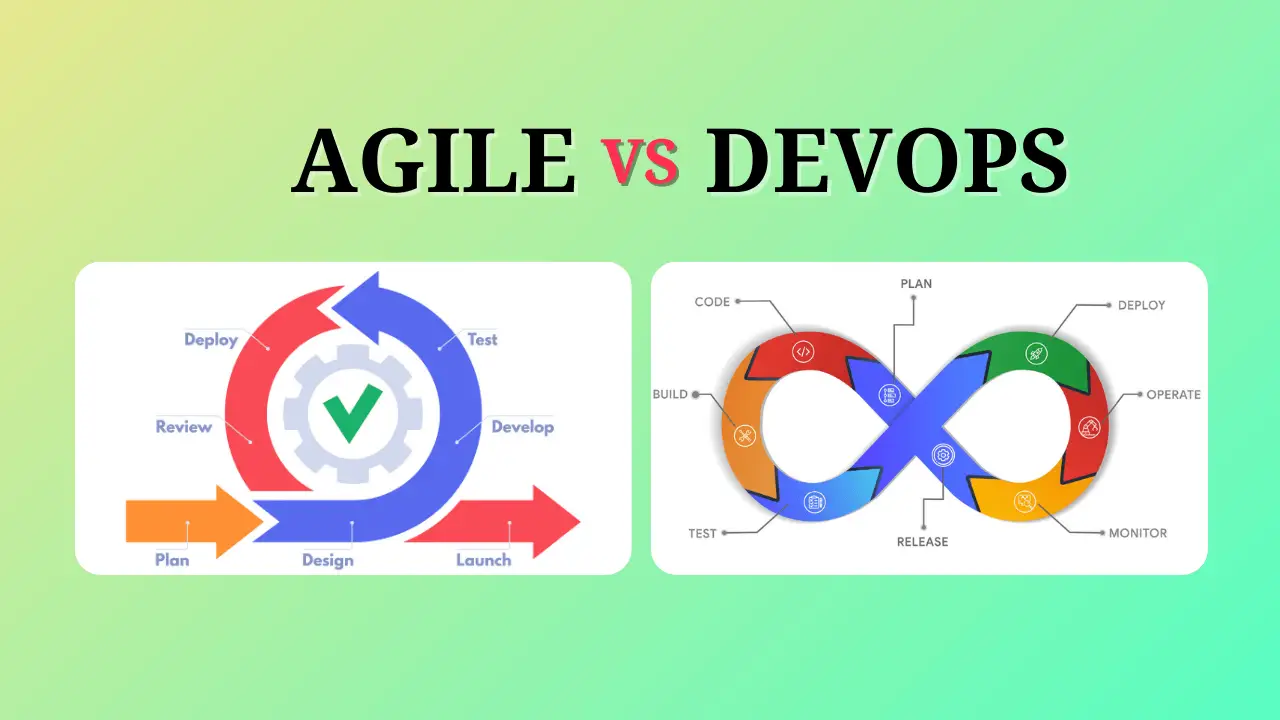

Agile Data emerged as organizations ran into the limits of traditional, waterfall-based data management, which was often slow and siloed. The concept draws on the Agile Manifesto (2001), which reshaped software development, and on DevOps, which bridged development and operations. In the mid-2010s, as big data and analytics grew, thought leaders such as Andy Palmer of Tamr and Steph Locke popularized DataOps, which brought Agile principles into data management. Agile Data became a core component of DataOps, addressing the need for faster, more collaborative data workflows in modern enterprises.

Why is it Relevant in DataOps?

DataOps is a methodology that combines Agile, DevOps, and lean manufacturing principles to optimize the data lifecycle, from ingestion to analytics. Agile Data is critical to DataOps because it:

- Enhances Agility: Enables rapid iteration and adaptation to changing business needs.

- Fosters Collaboration: Breaks down silos between data producers and consumers, aligning technical and business teams.

- Improves Quality: Incorporates continuous feedback and testing to ensure high-quality, reliable data.

- Supports Automation: Complements DataOps’ focus on automating data pipelines for efficiency.

Agile Data aligns with DataOps’ goal of delivering business-ready data quickly, making it a cornerstone for organizations aiming to leverage data as a strategic asset.

Core Concepts & Terminology

Key Terms and Definitions

- Agile Data: An approach to data management that applies Agile principles like iterative development, collaboration, and continuous feedback to data workflows.

- DataOps: A collaborative data management practice that integrates Agile, DevOps, and lean methodologies to improve data quality, speed, and accessibility.

- Data Pipeline: A series of processes for extracting, transforming, and loading (ETL) data from sources to destinations for analysis.

- Continuous Integration/Continuous Deployment (CI/CD): Practices borrowed from DevOps to automate testing and deployment of data pipelines.

- Data as a Product: A DataOps concept treating data outputs as products with defined owners, quality standards, and user-focused delivery.

- Data Mesh: A decentralized data architecture that aligns with Agile Data by treating data as products managed by domain-specific teams.

| Term | Definition |
|---|---|
| Data Sprint | A short, time-boxed period for delivering specific data features. |
| Data User Story | A brief requirement focused on the value to end-users of data. |
| Data Backlog | A prioritized list of pending data tasks or improvements. |
| Data Pipeline | Automated sequence of processes for ingesting, transforming, and delivering data. |
| Continuous Data Integration | Frequent merging and testing of data workflows to ensure quality. |
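To make the sprint, user story, and backlog terms concrete, here is a minimal sketch of a data backlog as a plain Python structure. The `DataUserStory` class, the scoring fields, and the greedy `plan_sprint` rule are all illustrative assumptions, not part of any Agile Data standard; real teams usually negotiate sprint scope rather than compute it.

```python
from dataclasses import dataclass


@dataclass
class DataUserStory:
    """A data user story: what data is needed and roughly what it costs."""
    title: str
    business_value: int  # hypothetical 1-10 score agreed with stakeholders
    effort_points: int   # hypothetical estimate in story points


def plan_sprint(backlog, capacity_points):
    """Greedily fill a sprint with the highest-value stories that still fit."""
    ordered = sorted(backlog, key=lambda s: s.business_value, reverse=True)
    sprint, remaining = [], capacity_points
    for story in ordered:
        if story.effort_points <= remaining:
            sprint.append(story)
            remaining -= story.effort_points
    return sprint


backlog = [
    DataUserStory("Daily sales dashboard feed", business_value=8, effort_points=5),
    DataUserStory("Deduplicate customer records", business_value=9, effort_points=8),
    DataUserStory("Add loyalty-program source", business_value=5, effort_points=3),
]

# With 13 points of capacity, the two highest-value stories fit; the third waits.
sprint = plan_sprint(backlog, capacity_points=13)
print([story.title for story in sprint])
```

Stories that do not fit stay in the backlog and are reprioritized before the next sprint.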

How it Fits into the DataOps Lifecycle

The DataOps lifecycle includes stages like data ingestion, transformation, storage, analytics, and delivery. Agile Data integrates into this lifecycle by:

- Iterative Development: Breaking data pipeline development into small, manageable sprints (e.g., 2–4 weeks) to deliver incremental improvements.

- Continuous Feedback: Incorporating stakeholder feedback to refine data products, ensuring alignment with business goals.

- Collaboration: Enabling cross-functional teams to work together on data pipelines, reducing silos and misalignments.

- Automation: Supporting automated testing, monitoring, and deployment to maintain agility and reliability.

Agile Data ensures that each stage of the DataOps lifecycle is responsive, collaborative, and focused on delivering value.

Architecture & How It Works

Components and Internal Workflow

Agile Data within DataOps relies on several components:

- Data Sources: Databases, APIs, sensors, or data lakes where raw data originates.

- Data Pipelines: ETL/ELT processes that transform and move data to storage or analytics platforms.

- Data Storage: Data warehouses and lakehouse platforms (e.g., Snowflake, Databricks), data lakes, or databases (e.g., PostgreSQL, MongoDB).

- Data Consumers: Business intelligence (BI) tools, data scientists, or analysts who use processed data for insights.

- Automation Tools: Tools like Apache Airflow, dbt, or Informatica for orchestrating and automating workflows.

- Monitoring and Governance: Tools like IBM Databand or Acceldata for real-time pipeline monitoring and data quality checks.

Workflow:

- Ingestion: Data is collected from various sources.

- Transformation: Data is cleaned, transformed, and enriched in iterative sprints.

- Storage: Processed data is stored in scalable systems like data lakes or warehouses.

- Analysis: Data is analyzed using BI tools or ML models.

- Delivery: Insights are delivered to stakeholders, with feedback loops driving further iterations.
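The workflow stages above can be sketched as plain functions, with the feedback loop modeled as a transformation added in a later sprint. This is a toy illustration under stated assumptions: the records are hard-coded, the "warehouse" is a Python list, and all function names are hypothetical.

```python
def ingest():
    """Ingestion: collect raw records (hard-coded here instead of a real source)."""
    return [
        {"order_id": 1, "amount": "19.99", "region": "north"},
        {"order_id": 2, "amount": "5.00", "region": "SOUTH"},
        {"order_id": 3, "amount": "", "region": "north"},
    ]


def drop_missing_amounts(rows):
    """Sprint 1 transformation: discard rows without an amount."""
    return [r for r in rows if r["amount"]]


def normalize_region(rows):
    """Sprint 2 transformation, added after stakeholder feedback."""
    return [{**r, "region": r["region"].lower()} for r in rows]


def run_pipeline(transformations):
    """Ingest, apply each transformation in order, store, then analyze."""
    rows = ingest()
    for transform in transformations:
        rows = transform(rows)
    warehouse = list(rows)  # Storage: stand-in for a warehouse table
    totals = {}
    for r in warehouse:  # Analysis: revenue per region
        totals[r["region"]] = round(totals.get(r["region"], 0) + float(r["amount"]), 2)
    return totals  # Delivery: the result handed to stakeholders


sprint_1 = run_pipeline([drop_missing_amounts])
sprint_2 = run_pipeline([drop_missing_amounts, normalize_region])
print(sprint_1)
print(sprint_2)
```

In the first run the regions keep their inconsistent casing; the second run reflects the feedback that region names should be normalized, without touching ingestion or storage.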

Architecture Diagram Description

Imagine a flowchart with the following structure:

- Left: Data Sources (e.g., APIs, databases, IoT devices) feed into a Data Ingestion Layer.

- Center: The Data Pipeline Layer (ETL/ELT processes) uses tools like Apache Airflow or dbt, with CI/CD pipelines for automation.

- Right: Data Storage (data lake/warehouse) connects to Data Consumers (BI tools, ML models).

- Feedback Loop: A continuous feedback loop from consumers back to the pipeline layer, with monitoring tools (e.g., Databand) ensuring quality.

Arrows indicate data flow, with governance and monitoring layers overseeing the entire process.

[Data Sources]

↓

[Ingestion Layer: Kafka/Fivetran]

↓

[Processing Layer: Spark/dbt]

↓

[Storage: Snowflake/BigQuery]

↓

[Analytics & ML: Tableau/Power BI/ML Models]

↓

[Monitoring: Great Expectations/Soda]

↺ Feedback to Dev & Ops Teams

Integration Points with CI/CD or Cloud Tools

Agile Data integrates with CI/CD and cloud tools to enhance automation and scalability:

- CI/CD: Tools like Jenkins or GitHub Actions automate testing and deployment of data pipelines, ensuring rapid iterations.

- Cloud Tools: Platforms like AWS, Azure, or Google Cloud provide scalable storage (e.g., S3, Azure Data Lake) and compute resources (e.g., AWS Lambda, Databricks).

- Version Control: Git is used for version control of data models and pipeline code, enabling collaboration and rollback capabilities.

- Orchestration: Apache Airflow schedules and manages pipeline workflows, and Kubernetes can run the containerized tasks in cloud-native environments.
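As a rough illustration of the CI/CD integration point, the sketch below shows the kind of unit test a CI job could run against pipeline code on every commit. The `clean_amounts` transformation is hypothetical, and the test uses only Python's standard `unittest` module; nothing here is specific to Jenkins or GitHub Actions.

```python
import unittest


def clean_amounts(rows):
    """Hypothetical transformation: parse amounts, dropping rows that fail to parse."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # skip malformed rows
        cleaned.append({**row, "amount": amount})
    return cleaned


class CleanAmountsTest(unittest.TestCase):
    def test_parses_valid_amounts(self):
        result = clean_amounts([{"id": 1, "amount": "12.50"}])
        self.assertEqual(result, [{"id": 1, "amount": 12.5}])

    def test_drops_malformed_rows(self):
        rows = [{"id": 1, "amount": "oops"}, {"id": 2}, {"id": 3, "amount": "4"}]
        self.assertEqual(clean_amounts(rows), [{"id": 3, "amount": 4.0}])


# Run the suite programmatically so the outcome can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(CleanAmountsTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
print("tests passed:", outcome.wasSuccessful())
```

A CI job would more typically invoke `python -m unittest` and fail the build on a non-zero exit code, which blocks deployment of a pipeline change that breaks a transformation.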

Installation & Getting Started

Basic Setup or Prerequisites

To implement Agile Data in a DataOps environment, you’ll need:

- Data Platform: A data warehouse (e.g., Snowflake) or data lake (e.g., AWS S3).

- Orchestration Tool: Apache Airflow for scheduling pipelines, optionally with dbt for SQL transformations.

- Version Control: Git for managing pipeline code.

- Monitoring Tool: IBM Databand or Acceldata for pipeline observability.

- Cloud Environment: AWS, Azure, or GCP for scalable infrastructure.

- Skills: Basic knowledge of SQL, Python, and Agile methodologies.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up a simple Agile Data pipeline using Apache Airflow on a local machine.

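1. Install Python:

   - Ensure Python 3.8+ is installed.
   - Run `python --version` to verify.

2. Install Apache Airflow and initialize its metadata database. The commands in this guide use the Airflow 2.x CLI, so the install is pinned below version 3 (on Airflow 2.7 and later, `airflow db migrate` is the preferred equivalent of `airflow db init`):

       pip install "apache-airflow<3"
       airflow db init

3. Set Up Airflow Home:

   - Create a directory (e.g., `~/airflow`).
   - Set the environment variable: `export AIRFLOW_HOME=~/airflow`
   - Airflow already defaults to `~/airflow`, so the database created in step 2 lives there. If you prefer a different directory, set the variable before running `airflow db init`.

4. Create a Simple DAG (Directed Acyclic Graph) in a file named `~/airflow/dags/simple_pipeline.py`:

       from datetime import datetime

       from airflow import DAG
       from airflow.operators.python import PythonOperator


       # Placeholder tasks; replace the print calls with real logic.
       def extract_data():
           print("Extracting data...")


       def transform_data():
           print("Transforming data...")


       def load_data():
           print("Loading data...")


       # catchup=False stops Airflow from backfilling a run for every day since start_date.
       with DAG(
           'simple_data_pipeline',
           start_date=datetime(2025, 1, 1),
           schedule_interval='@daily',
           catchup=False,
       ) as dag:
           extract = PythonOperator(task_id='extract', python_callable=extract_data)
           transform = PythonOperator(task_id='transform', python_callable=transform_data)
           load = PythonOperator(task_id='load', python_callable=load_data)

           # Run the tasks in order: extract, then transform, then load.
           extract >> transform >> load

5. Start Airflow. Run each command in its own terminal, with `AIRFLOW_HOME` set in both:

       airflow webserver -p 8080
       airflow scheduler

6. Access Airflow UI:

   - Open `http://localhost:8080` in a browser.
   - Log in with an Airflow user. If none exists yet, create one with `airflow users create`.
   - Enable the `simple_data_pipeline` DAG and trigger it.

7. Monitor Pipeline:

   - Use Airflow’s UI to monitor task execution and logs.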

This setup gives you a minimal three-task pipeline to iterate on: each task can be changed, rerun, and reviewed in small increments, in line with Agile Data principles.
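As a possible next iteration, the placeholder `extract_data`, `transform_data`, and `load_data` callables could be developed and tried out as ordinary functions before they are wired into the DAG. The sketch below uses only the standard library; the CSV text, the `orders` table, and the function signatures are assumptions made for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read from a file, API, or database.
RAW_CSV = """order_id,amount
1,19.99
2,5.00
3,
"""


def extract_data(source=RAW_CSV):
    """Read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(source)))


def transform_data(rows):
    """Drop rows with no amount and convert fields to proper types."""
    return [
        (int(r["order_id"]), float(r["amount"]))
        for r in rows
        if r["amount"]
    ]


def load_data(records, conn):
    """Write records to a SQLite table and return the resulting row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?)", records)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


conn = sqlite3.connect(":memory:")
loaded = load_data(transform_data(extract_data()), conn)
print(f"Loaded {loaded} rows")
```

Here the functions pass data to each other directly. Airflow tasks run separately, so inside a DAG the intermediate data would need to go through XCom or external storage instead.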

Real-World Use Cases

Use Case 1: Retail – Customer Behavior Analytics

A retail company uses Agile Data to analyze customer purchase patterns:

- Scenario: The company collects data from POS systems, e-commerce platforms, and loyalty programs.

- Implementation: Data engineers build iterative ETL pipelines using dbt and Snowflake, with weekly sprints to refine transformations based on marketing team feedback.

- Outcome: Rapid insights into customer preferences, enabling targeted promotions and a 15% increase in sales.

Use Case 2: Healthcare – Patient Data Integration

A hospital implements Agile Data to integrate patient data from EHR systems:

- Scenario: Data from multiple EHRs needs to be unified for analytics.

- Implementation: Cross-functional teams use Apache Airflow and Azure Data Factory to create pipelines, with automated quality checks and stakeholder feedback loops.

- Outcome: Improved patient care through timely, accurate data insights.

Use Case 3: Finance – Fraud Detection

A bank leverages Agile Data for real-time fraud detection:

- Scenario: Transaction data must be processed in real-time to detect anomalies.

- Implementation: Data scientists and engineers collaborate on a streaming pipeline using Kafka and Databricks, with CI/CD for rapid model updates.

- Outcome: Reduced fraud incidents by 20% through faster detection.

Use Case 4: Manufacturing – Supply Chain Optimization

A manufacturer uses Agile Data to optimize supply chain logistics:

- Scenario: Data from IoT sensors and ERP systems is analyzed to predict inventory needs.

- Implementation: Iterative pipelines built with Apache Spark and AWS Glue, with continuous monitoring using Acceldata.

- Outcome: 10% reduction in inventory costs due to precise demand forecasting.

Benefits & Limitations

Key Advantages

- Faster Time-to-Insights: Iterative development reduces delivery time for data products.

- Improved Data Quality: Continuous testing and feedback ensure reliable data.

- Enhanced Collaboration: Cross-functional teams align technical and business goals.

- Scalability: Agile Data supports scaling pipelines for large data volumes.

Common Challenges or Limitations

- Cultural Resistance: Teams accustomed to traditional methods may resist Agile practices.

- Skill Gaps: Requires expertise in Agile methodologies and data tools.

- Tooling Complexity: Integrating multiple tools (e.g., Airflow, dbt) can be challenging.

- Data Governance: Ensuring compliance in iterative processes can be complex.

Best Practices & Recommendations

Security Tips

- Access Controls: Implement role-based access control (RBAC) for data pipelines.

- Data Masking: Use tools like Delphix to mask sensitive data during testing.

- Encryption: Ensure data is encrypted in transit and at rest.
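To illustrate the data masking tip, here is a minimal sketch of two common techniques: keyed hashing for identifiers and partial redaction for emails. It is a generic standard-library example, not how Delphix or any specific product works, and the key, field names, and record are hypothetical.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secrets manager, not source code.
MASKING_KEY = b"example-only-key"


def pseudonymize(value, key=MASKING_KEY):
    """Replace a sensitive value with a deterministic keyed SHA-256 digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


record = {"patient_id": "P-1001", "email": "jane.doe@example.com", "visits": 3}
masked = {
    "patient_id": pseudonymize(record["patient_id"]),
    "email": mask_email(record["email"]),
    "visits": record["visits"],  # non-sensitive fields pass through unchanged
}
print(masked["email"])
```

Because the digest is deterministic for a given key, masked identifiers can still be joined across test tables without exposing the original values.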

Performance

- Optimize Pipelines: Use partitioning, clustering, or indexing (depending on what the warehouse supports) to improve query performance.

- Automate Monitoring: Deploy tools like IBM Databand for real-time pipeline observability.
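Automated monitoring often starts with simple freshness and volume checks. The sketch below shows one way such a check might look; the thresholds, the `check_pipeline_health` name, and the alert strings are assumptions for illustration and do not reflect any particular observability tool's API.

```python
from datetime import datetime, timedelta, timezone


def check_pipeline_health(last_loaded_at, row_count, now,
                          max_age=timedelta(hours=24), min_rows=1):
    """Return a list of alert messages; an empty list means the load looks healthy."""
    alerts = []
    if now - last_loaded_at > max_age:
        alerts.append(f"stale data: last load at {last_loaded_at.isoformat()}")
    if row_count < min_rows:
        alerts.append(f"low volume: {row_count} rows (expected at least {min_rows})")
    return alerts


now = datetime(2025, 1, 3, 12, 0, tzinfo=timezone.utc)

# Loaded six hours ago with plenty of rows: no alerts.
healthy = check_pipeline_health(datetime(2025, 1, 3, 6, 0, tzinfo=timezone.utc), 500, now)

# Loaded more than two days ago and empty: both checks fire.
stale = check_pipeline_health(datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc), 0, now)

print(healthy)
print(stale)
```

A scheduled job could run checks like these after each load and route any alerts to the team's usual notification channel.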

Maintenance

- Version Control: Use Git for pipeline code and data models to track changes.

- Documentation: Maintain clear documentation for pipeline workflows and iterations.

Compliance Alignment

- Data Governance: Implement automated quality checks to ensure compliance with regulations like GDPR or HIPAA.

- Audit Trails: Use tools like Collibra to maintain data lineage and auditability.

Automation Ideas

- CI/CD Pipelines: Automate testing and deployment with Jenkins or GitHub Actions.

- Quality Checks: Use dbt tests to validate data integrity automatically.
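dbt ships generic tests such as `not_null` and `unique`, which are declared in YAML and run against warehouse tables. The sketch below mimics the idea of those two checks in plain Python over in-memory rows. It is not dbt's implementation, and the sample `orders` data is hypothetical.

```python
def not_null(rows, column):
    """Return the rows where `column` is missing or None (the failures)."""
    return [r for r in rows if r.get(column) is None]


def unique(rows, column):
    """Return the values of `column` that appear more than once (the failures)."""
    seen, duplicates = set(), set()
    for r in rows:
        value = r.get(column)
        if value in seen:
            duplicates.add(value)
        seen.add(value)
    return sorted(duplicates)


orders = [
    {"order_id": 1, "customer_id": "c1"},
    {"order_id": 2, "customer_id": None},
    {"order_id": 2, "customer_id": "c3"},
]

failures = {
    "not_null(customer_id)": not_null(orders, "customer_id"),
    "unique(order_id)": unique(orders, "order_id"),
}
for name, failed in failures.items():
    print(name, "FAIL" if failed else "PASS", failed)
```

Both checks fail on this sample: one row has no customer, and order 2 appears twice. Running such checks on every pipeline change catches these problems before consumers see them.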

Comparison with Alternatives

| Aspect | Agile Data | Traditional Data Management | Data Mesh |
|---|---|---|---|
| Approach | Iterative, collaborative, automated | Sequential, siloed, manual | Decentralized, domain-driven |
| Speed | Fast (sprints) | Slow (waterfall) | Moderate (domain-specific) |
| Collaboration | High (cross-functional teams) | Low (siloed teams) | High (domain teams) |
| Scalability | High (cloud-native, CI/CD) | Limited (monolithic systems) | High (distributed architecture) |
| Use Case | Rapid analytics, real-time insights | Static reporting, batch processing | Domain-specific data products |

When to Choose Agile Data

- Choose Agile Data: When rapid iteration, collaboration, and automation are critical, such as in dynamic industries like retail or finance.

- Choose Alternatives: Use traditional methods for stable, low-change environments or Data Mesh for highly decentralized organizations.

Conclusion

Agile Data is a transformative approach within DataOps, enabling organizations to deliver high-quality data products quickly and collaboratively. By embracing iterative development, automation, and cross-functional teamwork, Agile Data addresses the challenges of modern data management. Its integration with CI/CD and cloud tools makes it scalable and adaptable, while its focus on continuous improvement ensures long-term value.

Future Trends

- AI/ML Integration: Automating data quality and pipeline optimization with AI.

- Real-Time Analytics: Growing demand for streaming data pipelines.

- DataOps-as-a-Service: Cloud-based platforms simplifying DataOps adoption.

Next Steps

- Experiment with the provided Airflow setup to build your first Agile Data pipeline.

- Explore advanced tools like dbt, Snowflake, or Databricks for production environments.

- Engage with the DataOps community for best practices and updates.

Resources

- Official Docs: Apache Airflow, dbt

- Communities: DataOps Community, Slack channels for Airflow and dbt