Introduction & Overview

Data governance is a critical discipline for organizations aiming to manage their data as a strategic asset, ensuring its quality, security, and compliance throughout its lifecycle. In the context of DataOps, data governance integrates with agile methodologies, automation, and collaborative practices to streamline data workflows and enhance business value. This tutorial provides an in-depth exploration of data governance within DataOps, covering its principles, architecture, setup, real-world applications, benefits, limitations, and best practices. Designed for technical readers, including data engineers, data scientists, and IT professionals, this guide offers practical insights and hands-on instructions to implement effective data governance in a DataOps environment.

What is Data Governance?

Definition

Data governance refers to the policies, processes, roles, and technologies that ensure the effective management, quality, security, and compliance of an organization’s data assets throughout their lifecycle. It establishes a framework for data accessibility, reliability, and protection, aligning data management with business objectives.

History or Background

Data governance emerged as a formal discipline in the early 2000s, driven by increasing data volumes, regulatory requirements (e.g., Sarbanes-Oxley and, later, GDPR), and the need for data-driven decision-making. Initially focused on compliance and risk mitigation, it has evolved to support innovation, analytics, and AI initiatives. The rise of DataOps, inspired by DevOps, has further integrated data governance into agile, automated data pipelines, emphasizing collaboration and continuous improvement.

| Year | Milestone |
|---|---|
| 1990s | Rise of data warehousing led to formal practices for managing enterprise data. |
| 2000s | Regulatory compliance (e.g., SOX, HIPAA) demanded structured data governance. |
| 2010s | Big Data, real-time analytics, and new regulations such as GDPR (adopted 2016) required automation-friendly governance frameworks. |
| 2020s | DataOps went mainstream, necessitating dynamic, CI/CD-integrated governance mechanisms. |

Why is it Relevant in DataOps?

DataOps combines agile methodologies, DevOps practices, and data management to accelerate the delivery of high-quality, trusted data. Data governance is integral to DataOps because it:

- Ensures Data Quality: Maintains accuracy, completeness, and consistency for reliable analytics.

- Supports Compliance: Aligns with regulations like GDPR, HIPAA, and CCPA, reducing legal risks.

- Enables Collaboration: Provides clear roles and policies, fostering cross-functional teamwork.

- Facilitates Automation: Embeds governance rules into automated data pipelines, reducing manual oversight.

- Drives Business Value: Aligns data strategies with business goals, enhancing decision-making.

Core Concepts & Terminology

Key Terms and Definitions

- Data Governance: The framework of policies, processes, and roles for managing data quality, security, and compliance.

- DataOps: A methodology that integrates agile practices, automation, and collaboration to streamline data workflows.

- Data Steward: An individual responsible for managing data quality, compliance, and usage within a domain.

- Data Catalog: A centralized repository of metadata describing data assets, aiding discovery and governance.

- Data Lineage: The tracking of data’s origin, transformations, and movement through pipelines.

- Metadata Management: The process of collecting, storing, and utilizing data about data to enhance governance.

- Data Quality: Attributes like accuracy, completeness, and consistency that ensure data reliability.

- DataGovOps: An extension of DataOps that automates governance processes within data workflows.

| Term | Definition |
|---|---|
| Data Steward | Person responsible for data quality, metadata, and governance. |
| Data Lineage | Visual representation of how data flows through systems. |
| Data Catalog | Inventory of data assets with metadata. |
| Data Policy | Formal rules defining data access, use, and retention. |
| Data Quality | Degree to which data is accurate, complete, and reliable. |

How it Fits into the DataOps Lifecycle

The DataOps lifecycle includes stages like data ingestion, transformation, analysis, and delivery. Data governance integrates at each stage:

- Ingestion: Defines policies for data collection and validation to ensure quality at the source.

- Transformation: Enforces rules for data cleansing, standardization, and enrichment.

- Analysis: Ensures data used in analytics is accurate, secure, and compliant.

- Delivery: Manages access controls and monitors usage to protect sensitive data.

Governance in DataOps is not a standalone process but is embedded in automated pipelines, ensuring continuous compliance and quality without hindering agility.
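The idea of governance embedded in the pipeline, rather than run as a separate review, can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions. The stage names, the `run_stage` helper, and the two rules (required fields at ingestion, two-letter uppercase country codes after transformation) are hypothetical examples and are not taken from any particular tool.

```python
"""Sketch: governance checks embedded in each DataOps stage.

Stage names and rules are hypothetical illustrations, not a specific tool's API.
"""
from typing import Callable

Records = list[dict]
Check = Callable[[Records], list[str]]  # returns violation messages (empty = compliant)


class GovernanceViolation(Exception):
    """Raised when a stage's output breaks one of its governance rules."""


def run_stage(name: str, records: Records,
              transform: Callable[[Records], Records], checks: list[Check]) -> Records:
    """Apply the stage's transform, then its checks; halt the pipeline on any violation."""
    output = transform(records)
    violations = [msg for check in checks for msg in check(output)]
    if violations:
        raise GovernanceViolation(f"{name}: " + "; ".join(violations))
    return output


def require_fields(*fields: str) -> Check:
    """Ingestion rule: every record carries the fields downstream stages rely on."""
    def check(records: Records) -> list[str]:
        return [f"record {i} missing {f!r}"
                for i, r in enumerate(records) for f in fields
                if r.get(f) in (None, "")]
    return check


def country_codes_standardized(records: Records) -> list[str]:
    """Transformation rule: country codes are exactly two uppercase letters."""
    return [f"record {i} has non-standard country {r['country']!r}"
            for i, r in enumerate(records)
            if not (len(r["country"]) == 2 and r["country"].isalpha() and r["country"].isupper())]


def standardize(records: Records) -> Records:
    """Transformation step: trim and uppercase country codes."""
    return [{**r, "country": r["country"].strip().upper()} for r in records]


if __name__ == "__main__":
    raw = [{"id": 1, "country": " us "}, {"id": 2, "country": "de"}]
    ingested = run_stage("ingestion", raw, lambda rs: rs, [require_fields("id", "country")])
    cleaned = run_stage("transformation", ingested, standardize, [country_codes_standardized])
    print(cleaned)  # [{'id': 1, 'country': 'US'}, {'id': 2, 'country': 'DE'}]
```

Adding a rule only means appending another function to a stage's `checks` list. This keeps governance inside the pipeline rather than beside it.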

| DataOps Stage | Governance Role |
|---|---|
| Ingestion | Define rules for sources, access, and encryption |
| Transformation | Apply validation, data masking, audit logging |
| CI/CD Deployment | Enforce schema/version control and automated tests |
| Monitoring | Generate lineage reports, anomaly detection alerts |

Architecture & How It Works

Components

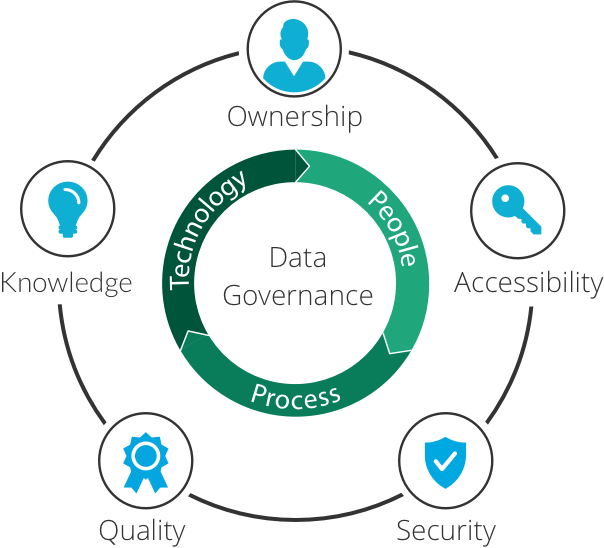

A data governance framework in DataOps comprises:

- People: Data stewards, data owners, governance managers, and cross-functional teams.

- Policies: Guidelines for data quality, security, access, and compliance.

- Processes: Workflows for data validation, monitoring, and issue resolution.

- Technology: Tools like data catalogs (e.g., Alation, Collibra), metadata management systems, and monitoring dashboards.

Internal Workflow

- Policy Definition: Data stewards define rules for quality, security, and compliance (e.g., data retention policies, access controls).

- Metadata Collection: Automated tools catalog data assets, capturing metadata like source, lineage, and sensitivity.

- Data Quality Checks: Automated validation ensures data meets quality standards (e.g., completeness, accuracy).

- Access Management: Role-based access controls (RBAC) restrict data access to authorized users.

- Monitoring and Auditing: Continuous monitoring tracks compliance and data usage, with alerts for anomalies.

- Iterative Improvement: Feedback loops refine policies and processes based on audit results.
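A toy end-to-end version of this workflow makes the steps concrete. The sketch below is an in-memory illustration, and the role names, classifications, and policy table are hypothetical. It covers policy definition, metadata collection, access management, auditing, and the feedback signal for iterative improvement. Data quality checks are left out to keep it short. A real deployment would delegate each piece to a catalog (e.g., Apache Atlas), an identity provider, and an audit store.

```python
"""Toy governance workflow: policy, metadata, access decision, audit trail.

Roles, classifications, and assets are hypothetical examples.
"""
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Policy definition: which roles may read data carrying each classification.
ACCESS_POLICY = {
    "PUBLIC": {"analyst", "engineer", "steward"},
    "SENSITIVE": {"steward"},
}


@dataclass
class CatalogEntry:
    """Metadata collected for one data asset."""
    name: str
    source: str
    classification: str
    upstream: list = field(default_factory=list)  # lineage: assets this one is derived from


@dataclass
class AuditEvent:
    timestamp: str
    user: str
    role: str
    asset: str
    allowed: bool


class GovernanceService:
    def __init__(self, policy: dict):
        self.policy = policy
        self.catalog: dict = {}
        self.audit_log: list = []

    def register(self, entry: CatalogEntry) -> None:
        """Catalog an asset; reject classifications that no policy covers."""
        if entry.classification not in self.policy:
            raise ValueError(f"no policy for classification {entry.classification!r}")
        self.catalog[entry.name] = entry

    def can_read(self, user: str, role: str, asset: str) -> bool:
        """RBAC decision driven by the asset's classification; every decision is audited."""
        allowed = role in self.policy[self.catalog[asset].classification]
        self.audit_log.append(AuditEvent(
            datetime.now(timezone.utc).isoformat(), user, role, asset, allowed))
        return allowed

    def denied_requests(self) -> list:
        """Denied attempts are input for stewards when refining policies."""
        return [event for event in self.audit_log if not event.allowed]
```

For example, suppose `customers` is registered as `SENSITIVE`. A `steward` can read it and an `analyst` is denied, and both decisions are recorded in `audit_log`.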

Architecture Diagram Description

Imagine a layered architecture:

- Top Layer (Governance Policies): Defines rules for quality, security, and compliance.

- Middle Layer (DataOps Pipeline): Includes ingestion, transformation, and delivery stages, with embedded governance checks.

- Bottom Layer (Technology Stack): Comprises data catalogs, lineage tools, and orchestration and CI/CD systems (e.g., Apache Airflow for orchestration, Jenkins for CI/CD).

- Connections: Bidirectional arrows link policies to pipelines, ensuring governance rules are applied at each stage, with monitoring tools feeding data back to refine policies.

```
[Data Sources]
        ↓
[Ingestion Layer]    → [Metadata Engine]  → [Data Catalog]
        ↓                      ↓                   ↓
[Transformation/ETL] → [Policy Engine]    → [Access Control]
        ↓
[Storage/Analytics]  → [Lineage Tracking] → [Audit Logs]
```
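The Lineage Tracking component in the diagram is essentially a directed graph of data assets. The minimal sketch below assumes lineage is available as hypothetical `(upstream, downstream)` edge pairs. It shows how such a graph answers two common governance questions: where did this data come from, and what is affected if it changes? Tools such as Apache Atlas expose comparable information through their own lineage APIs.

```python
"""Sketch: lineage as a directed graph with upstream/downstream traversal.

Asset names and edges are hypothetical examples.
"""
from collections import defaultdict, deque


class LineageGraph:
    def __init__(self, edges):
        # edges: iterable of (upstream_asset, downstream_asset) pairs
        self.downstream = defaultdict(set)
        self.upstream = defaultdict(set)
        for src, dst in edges:
            self.downstream[src].add(dst)
            self.upstream[dst].add(src)

    @staticmethod
    def _walk(start, neighbors):
        """Breadth-first traversal returning every asset reachable from start."""
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in neighbors.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def origins(self, asset):
        """All assets this one is derived from (root-cause analysis)."""
        return self._walk(asset, self.upstream)

    def impact(self, asset):
        """All assets affected if this one changes (impact analysis)."""
        return self._walk(asset, self.downstream)


if __name__ == "__main__":
    graph = LineageGraph([
        ("crm.customers", "staging.customers"),
        ("erp.orders", "staging.orders"),
        ("staging.customers", "mart.customer_ltv"),
        ("staging.orders", "mart.customer_ltv"),
        ("mart.customer_ltv", "dashboard.retention"),
    ])
    print(sorted(graph.impact("crm.customers")))
    # ['dashboard.retention', 'mart.customer_ltv', 'staging.customers']
    print(sorted(graph.origins("mart.customer_ltv")))
    # ['crm.customers', 'erp.orders', 'staging.customers', 'staging.orders']
```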

Integration Points with CI/CD or Cloud Tools

- CI/CD Integration: Governance rules are codified into CI/CD pipelines (e.g., using Jenkins or GitLab CI) to automate quality checks and compliance validation during data pipeline updates.

- Cloud Tools: Integrates with cloud platforms like AWS (e.g., AWS Glue Data Catalog for metadata, IAM for access control), Azure (e.g., Microsoft Purview, formerly Azure Purview), or GCP (e.g., Data Catalog).

- Orchestration Tools: Orchestrators such as Apache Airflow run governance checks as tasks inside data workflows, so compliance is verified on every pipeline run.
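Codifying governance rules for CI can be as simple as a script that runs on every change and fails the build when it finds a violation. The sketch below assumes each dataset is described by a metadata record in a JSON file with `name`, `owner`, `classification`, and `retention_days` fields. The file layout, field names, classifications, and retention limits are all hypothetical, not a standard.

```python
"""governance_gate.py: hypothetical CI check for dataset governance metadata.

The classifications, retention limits, and field names are illustrative assumptions.
"""
import json
import sys

ALLOWED_CLASSIFICATIONS = {"PUBLIC", "INTERNAL", "SENSITIVE"}
# Hypothetical maximum retention per classification (None = no limit)
MAX_RETENTION_DAYS = {"PUBLIC": None, "INTERNAL": 3650, "SENSITIVE": 365}


def validate(datasets: list) -> list:
    """Return violation messages; an empty list means the gate passes."""
    errors = []
    for ds in datasets:
        name = ds.get("name", "<unnamed>")
        if not ds.get("owner"):
            errors.append(f"{name}: missing owner")
        cls = ds.get("classification")
        if cls not in ALLOWED_CLASSIFICATIONS:
            errors.append(f"{name}: invalid classification {cls!r}")
            continue
        retention = ds.get("retention_days")
        limit = MAX_RETENTION_DAYS[cls]
        if isinstance(retention, bool) or not isinstance(retention, int) or retention <= 0:
            errors.append(f"{name}: retention_days must be a positive integer")
        elif limit is not None and retention > limit:
            errors.append(f"{name}: retention {retention}d exceeds {limit}d for {cls}")
    return errors


def main(argv: list) -> int:
    """CLI entry point: returns a process exit code for the CI job."""
    if len(argv) != 2:
        print("usage: governance_gate.py <datasets.json>", file=sys.stderr)
        return 2
    with open(argv[1], encoding="utf-8") as fh:
        errors = validate(json.load(fh))
    for err in errors:
        print(f"GOVERNANCE VIOLATION: {err}", file=sys.stderr)
    return 1 if errors else 0
```

To run it as a CI step, end the file with the usual `if __name__ == "__main__": sys.exit(main(sys.argv))` guard. A Jenkins, GitLab CI, or GitHub Actions job can then call `python governance_gate.py datasets.json`, and any non-zero exit code fails the build.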

| Tool | Integration Use |
|---|---|
| GitHub Actions / GitLab CI | Automate governance validation checks in pipelines |
| Apache Atlas | Lineage and metadata tracking |
| AWS Lake Formation | Central governance in cloud data lakes |
| Microsoft Purview (formerly Azure Purview) | Cloud-native data governance and discovery |
| Great Expectations | Embedded data quality checks |
| Airflow | Orchestrate governed ETL pipelines |

Installation & Getting Started

Basic Setup or Prerequisites

To implement data governance in a DataOps environment, you need:

- Data Catalog Tool: E.g., Collibra, Alation, or open-source options like Apache Atlas.

- Data Pipeline Tool: E.g., Apache Airflow, dbt, or Informatica.

- Version Control: Git for managing pipeline code and governance policies.

- Cloud Platform: AWS, Azure, or GCP for scalable storage and compute.

- Access Control System: RBAC or identity management (e.g., Okta, AWS IAM).

- Team Roles: Data stewards, engineers, and governance managers.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up a basic data governance framework using Apache Atlas and Apache Airflow on a local machine or cloud instance.

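1. Install Apache Atlas:

- Download the release sources from https://atlas.apache.org. Atlas is published as source code, so the server package is built locally with Maven.

- Prerequisites: Java 8 and Maven. Building with the `embedded-hbase-solr` profile bundles HBase and Solr, the storage and search backends Atlas relies on, so a local trial needs no separate database.

```shell
wget https://archive.apache.org/dist/atlas/2.2.0/apache-atlas-2.2.0-sources.tar.gz
tar -xzf apache-atlas-2.2.0-sources.tar.gz
cd apache-atlas-sources-2.2.0
export MAVEN_OPTS="-Xms2g -Xmx2g"
mvn clean -DskipTests package -Pdist,embedded-hbase-solr
```

- Extract the server package produced by the build and start the Atlas server. It listens on port 21000 by default, and the default local login is admin/admin:

```shell
tar -xzf distro/target/apache-atlas-2.2.0-server.tar.gz
cd apache-atlas-2.2.0
bin/atlas_start.py
# Verify the server is up (startup can take a few minutes)
curl -u admin:admin http://localhost:21000/api/atlas/admin/version
```

2. Set Up Apache Airflow: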

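- Install Airflow and initialize its metadata database. These commands target Airflow 2.x, and the official documentation also recommends installing with a constraints file. On Airflow 2.7 and later, `airflow db migrate` replaces the deprecated `airflow db init`. Then create a login for the web UI (you will be prompted for a password):

```shell
pip install "apache-airflow<3"
airflow db init
airflow users create --username admin --firstname Admin --lastname User --role Admin --email admin@example.com
```

- Start the Airflow webserver and scheduler, each in its own terminal:

```shell
airflow webserver -p 8080
airflow scheduler
```

3. Define Governance Policies: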

In Atlas, create classifications (e.g., SENSITIVE, PUBLIC) to tag data assets.

Define policies for data quality (e.g., “All customer data must have non-null IDs”).
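The example policy "All customer data must have non-null IDs" is straightforward to express as code that a pipeline task runs before data is published. The minimal sketch below uses plain Python. It assumes rows arrive as dictionaries and that the identifier column is named `customer_id`, which is a hypothetical name. In practice, a rule like this often lives in a library such as Great Expectations (`expect_column_values_to_not_be_null`) or in dbt's built-in `not_null` test.

```python
"""Sketch of the rule "All customer data must have non-null IDs"."""
from dataclasses import dataclass


@dataclass
class QualityResult:
    rule: str
    total: int
    failed_rows: list  # indices of rows that broke the rule

    @property
    def passed(self) -> bool:
        return not self.failed_rows


def check_non_null(rows: list, column: str) -> QualityResult:
    """Flag rows where `column` is missing, None, or a blank string."""
    failed = [
        i for i, row in enumerate(rows)
        if row.get(column) is None
        or (isinstance(row[column], str) and not row[column].strip())
    ]
    return QualityResult(rule=f"{column} IS NOT NULL", total=len(rows), failed_rows=failed)


if __name__ == "__main__":
    customers = [
        {"customer_id": "C001", "email": "a@example.com"},
        {"customer_id": None, "email": "b@example.com"},
        {"email": "c@example.com"},
        {"customer_id": "  ", "email": "d@example.com"},
    ]
    result = check_non_null(customers, "customer_id")
    print(result.passed, result.failed_rows)  # False [1, 2, 3]
```

Inside an Airflow task, raising an exception when `result.passed` is false turns this check into a blocking gate.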

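4. Integrate Governance with Airflow:

- Create a DAG that fails when the dataset's governance metadata in Atlas does not satisfy the quality policy, and save it in your DAGs folder (`~/airflow/dags/` by default):

```python
from datetime import datetime

import requests
from airflow import DAG
from airflow.operators.python import PythonOperator

ATLAS_URL = "http://atlas:21000"  # Atlas host; use http://localhost:21000 for a local install
DATASET_GUID = "<dataset_guid>"  # GUID of the dataset entity registered in Atlas


def check_data_quality():
    # Read the dataset's entity from Atlas and inspect a quality flag in its metadata.
    # "is_complete" is not a built-in Atlas attribute; it must be defined on your entity type.
    response = requests.get(
        f"{ATLAS_URL}/api/atlas/v2/entity/guid/{DATASET_GUID}",
        auth=("admin", "admin"),  # Atlas default credentials; replace outside local testing
        timeout=30,
    )
    response.raise_for_status()
    # The v2 API nests the entity's attributes under "entity"
    attributes = response.json().get("entity", {}).get("attributes", {})
    if attributes.get("is_complete"):
        print("Data quality check passed")
    else:
        # Raising fails the task, so the violation is visible in Airflow
        raise ValueError("Data quality check failed")


with DAG(
    "data_governance_dag",
    start_date=datetime(2025, 1, 1),
    schedule="@daily",  # Airflow 2.4+; older versions use schedule_interval
    catchup=False,  # do not backfill every day since start_date
) as dag:
    quality_check = PythonOperator(
        task_id="check_data_quality",
        python_callable=check_data_quality,
    )
```

5. Monitor and Audit: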

Use Atlas to track data lineage and compliance.

Set up alerts in Airflow for governance violations (for example, failure emails or an `on_failure_callback` on the validation task).
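The classifications from step 3 and the lineage view from step 5 can also be reached through Atlas's v2 REST API, which makes them scriptable from CI or Airflow. The sketch below only builds the HTTP requests with Python's standard library, so it runs without a server. Replace these assumptions before use: the host, the `admin`/`admin` credentials (Atlas's defaults for a local install), and the GUIDs. To send a request, pass it to `urllib.request.urlopen`.

```python
"""Build Apache Atlas v2 REST requests for classifications and lineage.

Host, credentials, and GUIDs are placeholders for a local install.
"""
import base64
import json
import urllib.parse
import urllib.request

ATLAS_API = "http://localhost:21000/api/atlas/v2"
AUTH = base64.b64encode(b"admin:admin").decode()  # Atlas default local credentials


def _request(method: str, path: str, body=None, query=None) -> urllib.request.Request:
    url = f"{ATLAS_API}{path}"
    if query:
        url += "?" + urllib.parse.urlencode(query)
    data = json.dumps(body).encode() if body is not None else None
    headers = {"Authorization": f"Basic {AUTH}", "Content-Type": "application/json"}
    return urllib.request.Request(url, data=data, headers=headers, method=method)


def create_classifications(names: list) -> urllib.request.Request:
    """Define classification types such as SENSITIVE and PUBLIC."""
    defs = [{"name": n, "description": f"{n} data", "superTypes": [], "attributeDefs": []}
            for n in names]
    return _request("POST", "/types/typedefs", body={"classificationDefs": defs})


def classify_entity(guid: str, names: list) -> urllib.request.Request:
    """Attach existing classifications to an entity."""
    return _request("POST", f"/entity/guid/{guid}/classifications",
                    body=[{"typeName": n} for n in names])


def lineage(guid: str, direction: str = "BOTH", depth: int = 3) -> urllib.request.Request:
    """Fetch upstream (INPUT), downstream (OUTPUT), or BOTH lineage for an entity."""
    return _request("GET", f"/lineage/{guid}", query={"direction": direction, "depth": depth})


if __name__ == "__main__":
    req = create_classifications(["SENSITIVE", "PUBLIC"])
    print(req.get_method(), req.full_url)
    # Sending it requires a running Atlas server: urllib.request.urlopen(req)
```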

Real-World Use Cases

- Financial Services: Regulatory Compliance:

- A bank uses DataOps to automate data pipelines for real-time transaction monitoring. Data governance ensures compliance with Anti-Money Laundering (AML) regulations by enforcing data quality checks and access controls, reducing compliance risks.

- Healthcare: Patient Data Protection:

- A hospital implements DataOps to process patient data for analytics. Governance policies enforce HIPAA compliance by encrypting sensitive data and restricting access to authorized personnel, ensuring patient privacy.

- Retail: Personalized Marketing:

- A retailer uses DataOps to analyze customer data for targeted campaigns. Governance ensures data quality (e.g., accurate customer profiles) and compliance with GDPR, enabling personalized marketing while limiting legal risk.

- Manufacturing: Predictive Maintenance:

- A manufacturer uses DataOps to process IoT sensor data for predictive maintenance. Governance ensures data integrity and lineage, enabling accurate predictions and reducing downtime.

Benefits & Limitations

Key Advantages

- Improved Data Quality: Ensures accurate, complete, and consistent data for analytics.

- Regulatory Compliance: Aligns with GDPR, HIPAA, and CCPA, reducing legal risks.

- Enhanced Collaboration: Clear roles and policies foster cross-team alignment.

- Automation: Embeds governance into DataOps pipelines, reducing manual effort.

- Business Value: Aligns data with strategic goals, driving better decisions.

Common Challenges or Limitations

- Complexity: Setting up governance frameworks can be time-consuming and resource-intensive.

- Resistance to Change: Teams may resist new policies or processes, slowing adoption.

- Scalability: Governance frameworks may struggle with rapidly growing data volumes.

- Tool Integration: Ensuring compatibility across diverse tools can be challenging.

Best Practices & Recommendations

- Security Tips:

- Implement RBAC to restrict data access.

- Use encryption for sensitive data at rest and in transit.

- Performance:

- Automate data quality checks so that validation does not become a manual bottleneck in pipelines.

- Use scalable cloud tools for governance (e.g., Azure Purview).

- Maintenance:

- Regularly update policies based on audit feedback.

- Monitor data lineage to trace and resolve issues quickly.

- Compliance Alignment:

- Map governance policies to specific regulations (e.g., GDPR’s data minimization).

- Use automated compliance checks in CI/CD pipelines.

- Automation Ideas:

- Integrate governance with CI/CD using tools like Jenkins or GitLab.

- Use data catalogs to automate metadata management and discovery.
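Two of these recommendations can be combined in a small transformation step: mapping policies to regulations such as GDPR's data minimization, and protecting sensitive fields. The sketch below makes two assumptions. It uses a hypothetical per-purpose allow-list of columns, and it replaces a direct identifier with a stable pseudonym using keyed hashing (HMAC-SHA256). Under GDPR, pseudonymized data is still personal data, so this step reduces exposure but does not remove compliance obligations. The key belongs in a secrets manager, not in code.

```python
"""Sketch: purpose-based data minimization plus HMAC pseudonymization.

Purposes, columns, and the key are hypothetical; load real keys from a secrets manager.
"""
import hashlib
import hmac

# Columns each purpose is allowed to receive (data minimization).
ALLOWED_COLUMNS = {
    "marketing_analytics": {"customer_id", "country", "segment"},
    "fraud_review": {"customer_id", "email", "country", "last_login_ip"},
}
# Direct identifiers that are pseudonymized before data leaves the governed zone.
PSEUDONYMIZE = {"customer_id"}


def pseudonym(value: str, key: bytes) -> str:
    """Stable keyed pseudonym: same value and key give the same output; not recomputable without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimize(record: dict, purpose: str, key: bytes) -> dict:
    """Keep only the columns the purpose needs, pseudonymizing direct identifiers."""
    allowed = ALLOWED_COLUMNS[purpose]  # KeyError for unknown purposes: fail closed
    out = {}
    for col, val in record.items():
        if col not in allowed:
            continue  # drop anything the purpose does not need
        out[col] = pseudonym(str(val), key) if col in PSEUDONYMIZE else val
    return out


if __name__ == "__main__":
    key = b"demo-key-do-not-use"
    row = {"customer_id": "C001", "email": "a@example.com", "country": "DE", "segment": "gold"}
    print(minimize(row, "marketing_analytics", key))
```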

Comparison with Alternatives

| Aspect | Data Governance in DataOps | Traditional Data Governance | DataGovOps |
|---|---|---|---|
| Approach | Integrated with agile DataOps pipelines | Manual, policy-heavy processes | Automated governance within DataOps |
| Automation | High (embedded in CI/CD) | Low (manual checklists) | High (governance-as-code) |
| Speed | Fast, iterative | Slow, bureaucratic | Fast, continuous |
| Collaboration | Cross-functional teams | Siloed roles | Cross-functional with automation |
| Use Case | Agile analytics, real-time insights | Compliance-focused, static data | Agile governance with automation |

When to Choose Data Governance in DataOps:

- When agility and automation are critical for data pipelines.

- For organizations with complex, high-volume data needing real-time governance.

- When compliance and collaboration are equally important.

Conclusion

Data governance in DataOps is a powerful approach to managing data as a strategic asset, ensuring quality, compliance, and collaboration while maintaining agility. By embedding governance into automated pipelines, organizations can unlock the full potential of their data, driving innovation and competitive advantage. As data volumes grow and regulations evolve, trends like AI-driven governance and governance-as-code will shape the future. Start small, align governance with business goals, and leverage tools like Apache Atlas or Collibra to build a robust framework.