📌 Introduction & Overview

What is a Data Warehouse?

A Data Warehouse (DW) is a centralized repository designed to store, manage, and analyze vast amounts of structured data from multiple sources. It is optimized for querying and reporting, rather than transaction processing. Unlike traditional databases used in OLTP (Online Transaction Processing), data warehouses are designed for OLAP (Online Analytical Processing).

In a DevSecOps context, a data warehouse serves as a critical backend for gathering telemetry, logs, metrics, and security analytics from CI/CD pipelines, cloud infrastructure, and runtime environments—supporting security compliance, audit readiness, and operational intelligence.

History and Background

- 1980s: Conceptual foundations laid by IBM’s “Business Data Warehouse.”

- 1990s: Ralph Kimball and Bill Inmon developed competing methodologies (Dimensional vs. Corporate Information Factory).

- 2000s–2010s: Rise of cloud-based solutions like Amazon Redshift, Google BigQuery, Snowflake.

- Now: Deep integration with DevOps, DevSecOps, AI/ML pipelines, and compliance systems.

Why It’s Relevant in DevSecOps

- Security Telemetry Aggregation: Stores audit logs, IAM actions, secrets scanning, vulnerability reports.

- Compliance Audits: Enables traceability of controls, evidence collection for SOC 2, HIPAA, etc.

- Operational Intelligence: Facilitates long-term trend analysis on deployment frequency, incident response, and anomaly detection.

- Infrastructure & Pipeline Observability: Bridges the gap between development velocity and security control monitoring.

🔍 Core Concepts & Terminology

| Term | Definition |

|---|---|

| ETL/ELT | Extract, Transform, Load / Extract, Load, Transform – Data ingestion flows |

| Star Schema | Dimensional modeling for analytical queries |

| Fact Table | Quantitative data for analysis (e.g., # of failed builds) |

| Dimension Table | Contextual data (e.g., date, team, pipeline name) |

| Time-series Data | Chronological data used for trend/security analysis |

| Data Mart | Subset of a data warehouse focused on a particular function or team |

| OLAP Cube | Multi-dimensional data model used for fast analytical queries |

How It Fits into the DevSecOps Lifecycle

| Stage | Usage of Data Warehouse |

|---|---|

| Plan | Analyze past vulnerabilities, security debt, risk scores |

| Develop | Monitor secrets leaks, coding anomalies, PR stats |

| Build | Track failed builds due to security policy violations |

| Test | Analyze trends in SAST/DAST test failures |

| Release | Store and query release audit trails |

| Deploy | Aggregate deployment patterns, track misconfigurations |

| Operate | Store logs, metrics, alerts for security monitoring |

| Monitor | Long-term storage of observability and incident response data |

🧰 Architecture & How It Works

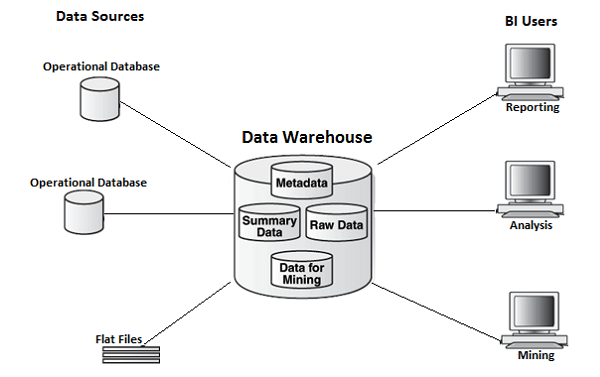

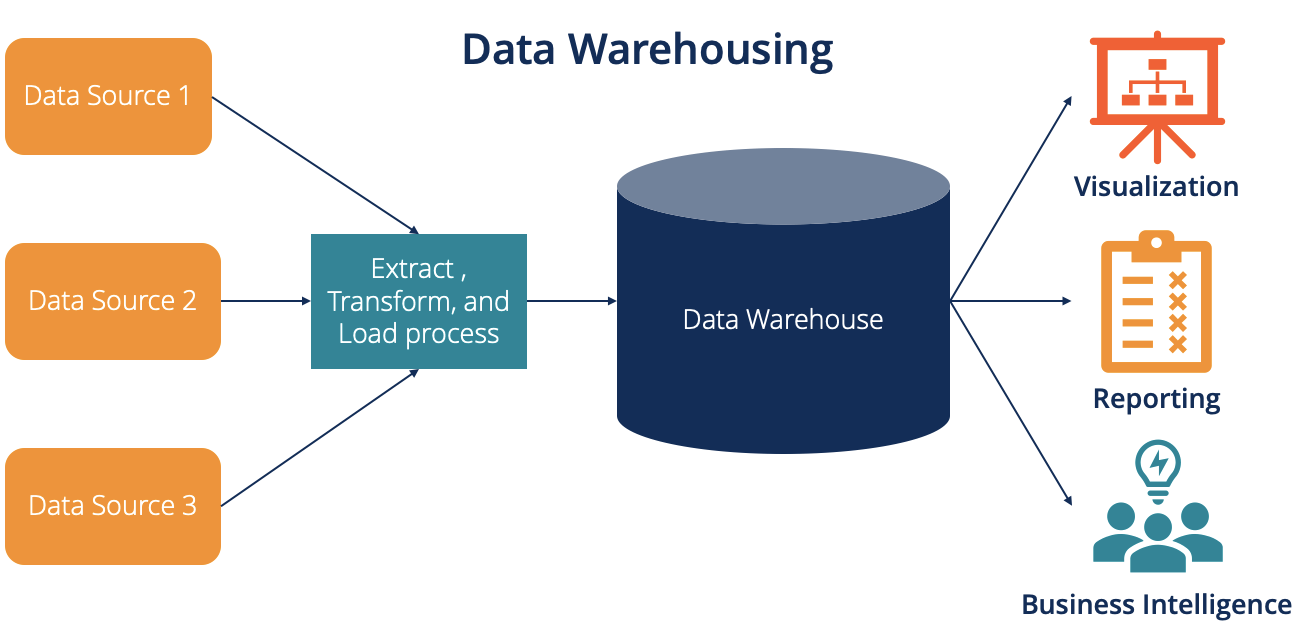

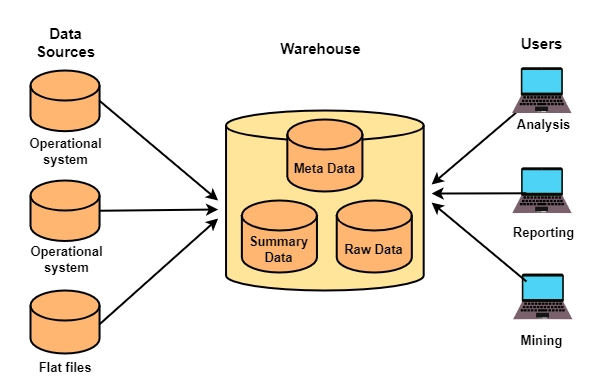

Components

- Data Sources: CI/CD pipelines, cloud logs, SAST/DAST tools, secrets scanners

- ETL/ELT Tools: Apache Airflow, dbt, AWS Glue

- Data Warehouse Engine: Amazon Redshift, Google BigQuery, Snowflake

- BI Layer / Query Layer: Looker, Metabase, Grafana, Apache Superset

- Access Control & Auditing: Role-based access, encryption, and logging

Internal Workflow

- Ingestion: Pull data from source systems via APIs or log shippers

- Transformation: Clean and normalize logs (e.g., unify timestamp formats)

- Load: Push into warehouse as structured tables

- Query & Analysis: Use SQL or BI tools to derive insights

- Alerting: Use thresholds and rules to trigger alerts or dashboards

Architecture Diagram (Descriptive)

+--------------------+ +---------------+ +---------------------+

| CI/CD Tools | --> | ETL/ELT Tools | --> | Data Warehouse |

| (Jenkins, GitLab) | | (Airflow, dbt)| | (BigQuery, Redshift)|

+--------------------+ +---------------+ +---------------------+

|

v

+----------------+

| BI/Dashboards |

| (Grafana, etc) |

+----------------+

Integration Points with CI/CD & Cloud

- GitHub Actions: Output logs piped to AWS Athena via Firehose

- Kubernetes: Logs ingested using Fluent Bit → Snowflake

- Terraform: Cloud infrastructure changes tracked and analyzed for drift

- Falco / Sysdig: Runtime security alerts ETL-ed into a warehouse

- Snyk / Trivy: Vulnerability scan results transformed and ingested

⚙️ Installation & Getting Started

Basic Setup Requirements

- Cloud Provider: AWS / GCP / Azure

- Permissions: IAM roles to access data sources

- ETL Tool: dbt / Airflow / Fivetran

- BI Tool (optional): Metabase or Looker

Hands-on Setup: Google BigQuery + GitHub Actions Logs

- Create BigQuery Dataset

bq mk --dataset my_project:devsecops_logs

- Export GitHub Actions Logs to GCS (Google Cloud Storage)

Use GitHub’s REST API or a webhook to capture run logs. - Ingest Using dbt

# dbt_project.yml

version: 2

name: 'devsecops_logs'

profile: 'bigquery'

models:

logs:

materialized: table

- Schedule Daily Sync with Airflow

from airflow import DAG

from airflow.operators.bash import BashOperator

with DAG('github_log_pipeline', schedule_interval='@daily') as dag:

fetch_logs = BashOperator(

task_id='fetch_logs',

bash_command='python3 fetch_github_logs.py'

)

- Query Example

SELECT actor_login, COUNT(*) AS build_failures

FROM `devsecops_logs.github_actions`

WHERE conclusion = 'failure'

GROUP BY actor_login

ORDER BY build_failures DESC;

🧪 Real-World Use Cases

1. Security Incident Forensics

- Centralize logs from Falco, AWS CloudTrail, and GitHub Audit Logs

- Perform correlation analysis during breach investigations

2. Vulnerability Trend Analysis

- Aggregate results from tools like SonarQube, Snyk, and Trivy

- Track severity levels and trends over time per project/team

3. Compliance Evidence Collection

- Collect and store logs required for PCI-DSS, ISO 27001 audits

- Automate reports showing control coverage and historical evidence

4. Cost and Resource Drift Monitoring

- Ingest Terraform state and actual cloud usage logs

- Identify untagged or out-of-policy resources

✅ Benefits & Limitations

Key Advantages

- 🔍 Unified Security View: Combines disparate logs into one schema

- ⏱️ Time-Series Analytics: Long-term trend analysis for risk profiling

- 📊 Business Intelligence: Empower non-engineers via dashboards

- 🛡️ Immutable Audit Trail: Supports compliance with read-only data

Common Limitations

- 💸 Cost Management: Storage and query pricing can grow quickly

- 🧩 Complexity: Requires knowledge of data modeling and ETL tools

- ⏳ Latency: Not suitable for real-time response actions

- 🔐 Security Risk: If poorly secured, becomes a central breach point

🛠️ Best Practices & Recommendations

🔐 Security Tips

- Use encryption at rest and in transit

- Set fine-grained IAM roles for datasets and query access

- Implement logging and anomaly detection for warehouse queries

🧪 Performance & Maintenance

- Use partitioned tables for time-based queries

- Regularly archive old data or use tiered storage

- Monitor query performance and cost usage

⚖️ Compliance Alignment

- Map warehouse data schemas to compliance control frameworks

- Store audit logs for minimum retention periods

- Use policy-as-code (e.g., OPA, Sentinel) for governance automation

🔁 Automation Ideas

- Auto-trigger compliance report generation

- Automate ETL flows using CI pipelines

- Integrate with Slack/Teams for anomaly alerting

🔄 Comparison with Alternatives

| Feature / Tool | Data Warehouse (BigQuery, Redshift) | Time-series DB (InfluxDB) | ELK Stack |

|---|---|---|---|

| Analytical Queries | ✅ Excellent | ❌ Limited | ✅ Good |

| Log Ingestion | ✅ Yes | ✅ Yes | ✅ Excellent |

| Cost for Storage | ⚠️ Medium to High | ✅ Low | ⚠️ Medium |

| Security Compliance | ✅ Enterprise-grade support | ⚠️ Limited | ⚠️ Requires setup |

| Real-time Alerting | ❌ No | ✅ Yes | ✅ Yes |

| Dashboarding | ✅ Yes (BI tools) | ✅ Yes | ✅ Yes (Kibana) |

When to Choose a Data Warehouse

- You need long-term analytical capabilities

- You require rich integrations with BI tools

- You aim to store structured security or ops telemetry

🧭 Conclusion

In the evolving world of DevSecOps, a Data Warehouse acts as a foundational analytics platform. It enables teams to monitor security, maintain compliance, and improve operational excellence. As organizations mature, integrating warehousing into CI/CD and cloud infrastructure helps align business, security, and engineering goals.