Introduction & Overview

Apache Kafka is a distributed streaming platform that has become a cornerstone in modern DataOps practices. This tutorial provides an in-depth exploration of Kafka, focusing on its role in DataOps, core concepts, architecture, setup, use cases, benefits, limitations, best practices, and comparisons with alternatives. Designed for technical readers, this guide includes practical examples and structured insights to help you leverage Kafka effectively in data-driven workflows.

What is Apache Kafka?

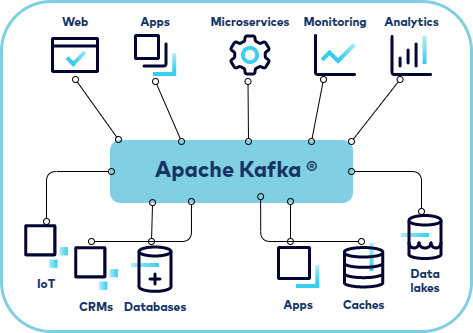

Apache Kafka is an open-source, distributed event-streaming platform designed for high-throughput, fault-tolerant, and scalable data processing. It enables real-time data pipelines and streaming applications by acting as a message broker that handles massive volumes of data across distributed systems.

- Core Functionality: Kafka allows systems to publish, subscribe, store, and process streams of records in real time.

- Key Characteristics: Distributed, scalable, fault-tolerant, and durable.

- Primary Use: Real-time data integration, event-driven architectures, and streaming analytics.

History or Background

Kafka was originally developed at LinkedIn in 2010 to address the need for a unified, high-throughput system to handle large-scale data streams. Open-sourced in 2011 under the Apache Software Foundation, it has since been adopted by thousands of organizations for its robustness and flexibility.

- Creators: Jay Kreps, Neha Narkhede, and Jun Rao.

- Evolution: From a messaging system to a full-fledged streaming platform with features like Kafka Streams and Connect.

- Adoption: Used by companies like Netflix, Uber, and Airbnb for real-time data processing.

Why is it Relevant in DataOps?

DataOps (Data Operations) is a methodology that combines DevOps principles with data management to deliver high-quality data pipelines efficiently. Kafka is integral to DataOps because it:

- Enables Real-Time Data Pipelines: Supports continuous data ingestion and processing.

- Facilitates Collaboration: Bridges data producers and consumers across teams.

- Enhances Automation: Integrates with CI/CD pipelines and orchestration tools.

- Scales Data Workflows: Handles large-scale, distributed data environments critical for modern analytics.

Core Concepts & Terminology

Key Terms and Definitions

- Topic: A category or feed name to which records are published (e.g., “user-clicks”).

- Partition: A topic is divided into partitions for parallel processing and scalability.

- Producer: An application that sends records to Kafka topics.

- Consumer: An application that subscribes to topics to process records.

- Broker: A Kafka server that stores and manages data.

- Consumer Group: A group of consumers that coordinate to process a topic’s partitions.

- Offset: A unique identifier for each record in a partition, enabling sequential processing.

- Kafka Connect: A framework for integrating Kafka with external systems (e.g., databases).

- Kafka Streams: A library for building real-time streaming applications.

| Term | Definition |

|---|---|

| Producer | Application that writes data (messages) into Kafka topics. |

| Consumer | Application that reads data from Kafka topics. |

| Topic | A category or stream where records are published. |

| Partition | A subdivision of a topic for scalability and parallelism. |

| Broker | Kafka server that stores data and serves client requests. |

| Cluster | Multiple Kafka brokers working together. |

| ZooKeeper | (Legacy, being replaced by Kafka Raft) Used to manage brokers and metadata. |

| Offset | A unique ID for each message in a partition. |

How It Fits into the DataOps Lifecycle

The DataOps lifecycle includes stages like data ingestion, processing, orchestration, and delivery. Kafka supports:

- Ingestion: Producers collect data from sources (e.g., IoT devices, databases).

- Processing: Kafka Streams or consumers transform data in real time.

- Orchestration: Integrates with tools like Apache Airflow or Kubernetes for pipeline automation.

- Delivery: Consumers feed processed data to analytics platforms, dashboards, or data lakes.

Architecture & How It Works

Components and Internal Workflow

Kafka’s architecture is designed for distributed, fault-tolerant data streaming:

- Brokers: Form a Kafka cluster, storing and replicating data across nodes.

- Topics and Partitions: Data is organized into topics, with partitions enabling parallel processing.

- Producers and Consumers: Producers write to topics, while consumers read from them using offsets.

- ZooKeeper: Manages cluster coordination, leader election, and configuration (though Kafka 3.3+ supports KRaft for ZooKeeper-less operation).

Workflow:

- Producers send records to a topic.

- Brokers store records in partitions, replicating them for fault tolerance.

- Consumers subscribe to topics, pulling records based on offsets.

- Kafka ensures durability by persisting data to disk and replicating across brokers.

Architecture Diagram Description

Since images cannot be included, imagine a diagram with:

- A cluster of Kafka brokers (nodes) connected via a network.

- Topics split into partitions, distributed across brokers.

- Producers (e.g., IoT devices, apps) pushing data to topics.

- Consumers (e.g., analytics platforms) pulling data from partitions.

- ZooKeeper (or KRaft) managing the cluster metadata.

Integration Points with CI/CD or Cloud Tools

Kafka integrates seamlessly with DataOps tools:

- CI/CD: Jenkins or GitLab pipelines automate Kafka topic creation or schema updates using tools like Confluent Schema Registry.

- Cloud Tools: AWS MSK, Confluent Cloud, or Azure Event Hubs for managed Kafka deployments.

- Orchestration: Kubernetes for scaling Kafka clusters, Apache Airflow for pipeline scheduling.

- Monitoring: Prometheus and Grafana for real-time metrics on throughput and latency.

Installation & Getting Started

Basic Setup or Prerequisites

To set up Kafka locally:

- Requirements: Java 8+, ZooKeeper (or KRaft for Kafka 3.3+), 4GB RAM, 10GB disk space.

- Operating System: Linux, macOS, or Windows.

- Tools: Download Kafka from apache.org.

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up Kafka on a local machine (Linux/macOS).

- Download Kafka:

wget https://downloads.apache.org/kafka/3.6.0/kafka_2.13-3.6.0.tgz

tar -xzf kafka_2.13-3.6.0.tgz

cd kafka_2.13-3.6.02. Start ZooKeeper:

bin/zookeeper-server-start.sh config/zookeeper.properties3. Start Kafka Broker:

In a new terminal:

bin/kafka-server-start.sh config/server.properties4. Create a Topic:

bin/kafka-topics.sh --create --topic my-topic --bootstrap-server localhost:9092 --partitions 3 --replication-factor 15. Produce Messages:

bin/kafka-console-producer.sh --topic my-topic --bootstrap-server localhost:9092Type messages (e.g., “Hello, Kafka!”) and press Enter.

6. Consume Messages:

In a new terminal:

bin/kafka-console-consumer.sh --topic my-topic --from-beginning --bootstrap-server localhost:90927. Verify Output: You should see the messages you typed in the consumer terminal.

Note: For production, configure server.properties for replication, retention policies, and security.

Real-World Use Cases

Kafka shines in DataOps for real-time data processing. Here are four scenarios:

- Real-Time Analytics (E-commerce):

- Scenario: An e-commerce platform tracks user clicks, purchases, and inventory in real time.

- Kafka Role: Producers send clickstream data to a Kafka topic. Consumers process it for personalized recommendations or inventory updates.

- Example: Amazon uses Kafka to process user activity for real-time pricing and recommendations.

- Log Aggregation (DevOps):

- Scenario: A DevOps team aggregates logs from microservices for monitoring.

- Kafka Role: Microservices publish logs to Kafka, and consumers feed them to Elasticsearch for analysis.

- Example: Netflix uses Kafka to collect and process application logs across its infrastructure.

- IoT Data Processing (Manufacturing):

- Scenario: A factory monitors sensor data from machines to predict maintenance needs.

- Kafka Role: Sensors produce data to Kafka topics, and Kafka Streams processes it for anomaly detection.

- Example: Siemens leverages Kafka for real-time IoT data in smart factories.

- Event-Driven Microservices (Finance):

- Scenario: A bank processes transactions in real time for fraud detection.

- Kafka Role: Transaction events are published to Kafka, with consumers triggering fraud alerts.

- Example: PayPal uses Kafka for real-time transaction processing.

Benefits & Limitations

Key Advantages

- Scalability: Handles millions of messages per second by adding brokers or partitions.

- Fault Tolerance: Data replication ensures no data loss during failures.

- Real-Time Processing: Enables low-latency data pipelines for analytics.

- Ecosystem: Kafka Connect and Streams simplify integration and processing.

Common Challenges or Limitations

- Complexity: Managing a Kafka cluster requires expertise in distributed systems.

- Resource Intensive: High-throughput setups demand significant CPU and storage.

- Latency: Not ideal for sub-millisecond latency requirements (e.g., high-frequency trading).

- Learning Curve: Understanding offsets, consumer groups, and configurations can be challenging.

Best Practices & Recommendations

Security Tips

- Enable SSL/TLS: Encrypt data in transit using SSL in

server.properties.security.protocol=SSL ssl.keystore.location=/path/to/keystore - Use ACLs: Restrict topic access with Access Control Lists (ACLs).

- Authentication: Implement SASL/PLAIN or Kerberos for user authentication.

Performance

- Optimize Partitions: Use 10–100 partitions per topic for balanced throughput.

- Tune Retention: Set

log.retention.hoursorlog.retention.bytesto manage disk usage. - Monitor Metrics: Use Prometheus to track broker health and latency.

Maintenance

- Regular Upgrades: Keep Kafka and ZooKeeper updated for security patches.

- Backup Metadata: Regularly back up ZooKeeper or KRaft metadata.

- Automate Scaling: Use Kubernetes for dynamic broker scaling.

Compliance Alignment

- GDPR/CCPA: Configure retention policies to delete sensitive data after a set period.

- Audit Logs: Enable Kafka’s audit logging for compliance tracking.

Automation Ideas

- CI/CD Integration: Automate topic creation using Kafka’s AdminClient API.

- Schema Management: Use Confluent Schema Registry for schema evolution.

Comparison with Alternatives

| Feature | Apache Kafka | RabbitMQ | AWS SQS | Apache Pulsar |

|---|---|---|---|---|

| Type | Distributed streaming platform | Message broker | Cloud-based queue | Distributed messaging/streaming |

| Scalability | High (partitions, brokers) | Moderate | High (cloud-managed) | High (segmented storage) |

| Latency | Low (milliseconds) | Low | Low | Low |

| Durability | Persistent storage | Persistent or transient | Persistent | Persistent |

| Ecosystem | Kafka Connect, Streams | Limited streaming | Limited (AWS-specific) | Pulsar Functions |

| Use Case | Real-time streaming, DataOps | Task queues, messaging | Simple queues | Streaming, multi-tenancy |

When to Choose Kafka

- Choose Kafka: For large-scale, real-time streaming, event-driven architectures, or DataOps pipelines requiring durability and scalability.

- Choose Alternatives: RabbitMQ for simpler task queues, SQS for cloud-native simplicity, or Pulsar for multi-tenancy and tiered storage.

Conclusion

Apache Kafka is a powerful tool for DataOps, enabling real-time data pipelines, scalability, and integration with modern data ecosystems. Its ability to handle high-throughput, fault-tolerant streaming makes it ideal for industries like e-commerce, finance, and IoT. While it has a learning curve and operational complexity, following best practices ensures robust deployments.

Future Trends

- KRaft Adoption: Kafka’s ZooKeeper-less mode (KRaft) will simplify cluster management.

- Cloud-Native Kafka: Managed services like Confluent Cloud and AWS MSK are gaining traction.

- AI Integration: Kafka is increasingly used to feed real-time data to AI/ML models.